Jon Krohn: 00:00:00

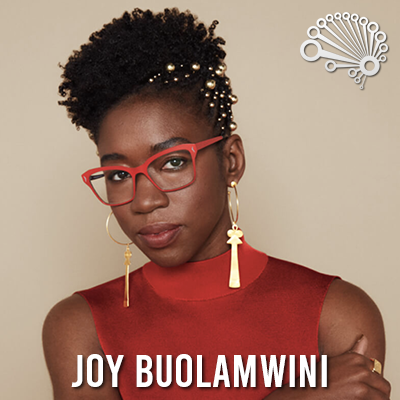

This is episode number 727 with Dr. Joy Buolamwini, President of The Algorithmic Justice League. Today’s episode is brought to you by Gurobi, the decision intelligence leader, and by CloudWolf, the Cloud Skills platform.

00:00:18

Welcome to the Super Data Science Podcast, the most listened-to podcast in the data science industry. Each week we bring you inspiring people and ideas to help you build a successful career in data science. I’m your host, Jon Krohn. Thanks for joining me today. And now let’s make the complex simple.

00:00:49

Welcome back to the Super Data Science Podcast. I’ve got a superstar in my midst today with Dr. Joy Buolamwini. She has so many enormous achievements I really struggled to pare them down for you for this intro. Well, here’s my best shot. During Joy’s PhD at MIT, her research uncovered extensive racial and gender biases in the AI services of big firms like Amazon, Microsoft, and IBM. The Coded Bias documentary that she stars in and that follows this research has a crazy 100% fresh rating on Rotten Tomatoes. Her TED Talk on algorithmic bias has over a million views. She founded the Algorithmic Justice League to create a world with more equitable and accountable technology. She’s been recognized in so many different lists and with so many awards, including the Bloomberg 50, the Tech Review 35 Under 35, the Forbes 30 Under 30, Time magazine’s AI 100, and she was the youngest person included in Forbes Top 50 Women in Tech. In addition to her MIT PhD, she also holds a Master’s from the University of Oxford, where she studied as a Rhodes Scholar, and she holds a Bachelor’s in Computer Science from Georgia Tech.

00:01:56

Today’s episode, if it’s not obvious already, should be fascinating to just about anyone. In this episode, Joy details the research that led to her uncovering the startling racial and gender biases in widely used commercial AI systems. She talks about how firms reacted to her discoveries, including which big tech companies were receptive and which ones were disparaging. She fills us in on what we can do to ensure our own AI models don’t reinforce historical stereotypes, and she fills us in on whether she thinks our AI future will be bleak or brilliant.

00:02:28

Finally, Joy’s new book, Unmasking AI, was released this very day. To celebrate I will personally ship 10 physical copies to people who, by Saturday November 4th, share what they think of today’s episode on social media. To be eligible for this giveaway please do this by commenting and/or re-sharing the LinkedIn posts that I publish about Joy’s episode from my personal LinkedIn account today. I will pick the 10 book recipients based on the quality of their comment or post.

00:02:56

All right, you ready for this magnificent episode? Let’s go. Dr. Buolamwini, awesome to have you on the show. Thank you for joining us. Where are you calling in from today?

Joy Buolamwini: 00:03:11

I’m calling in from Cambridge, and I have to clarify it’s Cambridge, Massachusetts, because I went to Oxford University as I think you did as well. The other Cambridge. I’m still loyal. Still loyal.

Jon Krohn: 00:03:23

Exactly. Recently I learned through, because I posted online last week that I’d be interviewing you and I put in as many accolades as I could cram into a single social media post, and I asked people for questions. We did get some audience questions, so we’ll get into those later. But I also learned through a fellow St. Gallen Symposium alumnus that you are a symposium alumna as well.

Joy Buolamwini: 00:03:51

I am. Who was the fellow connector?

Jon Krohn: 00:03:55

Florian Feltes.

Joy Buolamwini: 00:03:57

Yes. And they’re in the AI space so we’ve been in touch through that connection ever since then.

Jon Krohn: 00:04:04

Actually, I spoke virtually at his Zortify conference, the Zortify PwC conference in Luxembourg, last week at the time of recording.

Joy Buolamwini: 00:04:15

Oh, nice.

Jon Krohn: 00:04:15

There you go. I’m pressuring you on air now, maybe we can get you to speak at an event. I’m hosting for… The St. Gallen alumni community of US and Canada, I’m the president of that chapter.

Joy Buolamwini: 00:04:32

Oh, really?

Jon Krohn: 00:04:33

Yeah. We’ve got our first event coming up in New York in… Well, it’ll actually be over, it’s in October, so by the time this episode is out, it will have happened. But I can keep you in the loop on those things. The symposium is amazing.

Joy Buolamwini: 00:04:50

Where will it be? You said in New York?

Jon Krohn: 00:04:53

That’ll be in New York.

Joy Buolamwini: 00:04:55

You never know. I’m back and forth in New York quite a bit in October, so we’ll see.

Jon Krohn: 00:04:58

Nice. But we can let you know about… We could set up a future event around you.

Joy Buolamwini: 00:05:03

Interesting. Cool. That would be fun. It’s a great community.

Jon Krohn: 00:05:07

It’s an awesome community. I’ve talked about it on air before. We have too much to talk about related to you though to get into the symposium too much in this episode. Our listeners might know you from the 2020 critically acclaimed documentary, it has 100% on Rotten Tomatoes, it’s called Coded Bias.

Joy Buolamwini: 00:05:25

It’s 100% rotten. I don’t know what that really means.

Jon Krohn: 00:05:29

No, it’s 100% fresh.

Joy Buolamwini: 00:05:30

100% fresh, that’s what it means.

Jon Krohn: 00:05:35

0% rotten.

Joy Buolamwini: 00:05:38

100% fresh.

Jon Krohn: 00:05:40

And so it’s about AI bias and it’s about your Algorithmic Justice League, which advocates for equitable and accountable AI. And you also have an upcoming book, which is being released today. We are releasing this episode to coincide with the release of your new book, Unmasking AI. That means that today is Halloween, it’s October 31st, which is the perfect date to release a book about unmasking. Yes. And Joy’s just returned from Venice, so she’s got some great Venice masks and the classic mask from Coded Bias. If you’re watching the video version, you’re getting all that happening in real time. Your book, Unmasking AI, it’s a compelling book. It tells your journey from AI enthusiast, to critic, to activist chronologically in five parts. And so we’re going to begin this interview eight years ago when you were a graduate student at MIT’s famous Media Lab. Before Coded Bias, there was Coded Gaze. How did this term come to be and how has it affected your perspective regarding AI?

Joy Buolamwini: 00:06:57

Oh, that’s such a great question. When I was a graduate student, oh, so long ago, maybe not that long ago, but when I was a graduate student early on at the Media Lab, I took this class called Science Fabrication, and the idea was to read science fiction and create something inspired by the literature as long as you could build it in six weeks. And I wanted to shift my body. Shape-shifting. Pretty cool concept, we’ve seen it in many different iterations. But I figured I wouldn’t be changing the laws of physics anytime soon, so I probably needed to get more creative. Instead of changing my physical form, I thought, what if I could augment my reflection in a mirror? And so in exploring that, I learned about this material called half-silvered glass. And given its material properties, if it’s black in the background it will reflect the light so it will behave like a mirror, and if there’s light, that light will shine through.

00:07:57

I used that and created this contraption I called the Aspire Mirror because you could aspire to be what you wanted to be projected. Long story short, I got it working where I could put the face of a lion on my reflection if I wanted, or one of my favorite athletes at the time, Serena Williams. Now that she’s retired, still a fave, I’m liking Sha’Carri Richardson out here with the 100 meters, killing it. Also very inspired by Coco Gauff, et cetera. Little Steph Curry as… Anyways, you put whoever you want, whoever’s your aspiration. As I’m working on this, I thought, okay, I figured out the first part.

00:08:35

Second phase, can I have that mask or that image follow me in the mirror? I thought it would be cooler. That’s what led me to downloading some computer vision packages offline. And what I found was what I incorporated into my code didn’t work that well. The libraries I downloaded were not optimized for my face. And I was curious as a scientist, is it just my face, is it the reflection, is it shadows? Is it the angle, is it the pose? What is actually happening? And so that’s what led me to start exploring this question of, might there be a coded gaze building on concepts like the male gaze or the white gaze, which reflects those who have the power to shape priorities or to say what’s worthy. And so that term coded gaze came from the experience of coding literally in a white mask, having to fit a norm that didn’t quite reflect me in order to be rendered visible. And it builds a little bit. There’s a book up here, but there’s glasses on top, so I’m going to have to pick it carefully, very carefully.

00:09:59

When that was happening, there’s this book called Black Skin, White Masks. It was too… I was, wait, I couldn’t script this better. And I happened to have the white mask because it was around Halloween time. Here we are with the book coming out on Halloween, coming back to that really defining moment for me, coding in white face at MIT, the epicenter of innovation. Oh, oh, I have questions. What’s going on? Is it just my face or is there something more? And so that’s what ends up leading to what you read about in the book.

Jon Krohn: 00:10:38

And so it led to the discovery, or at least it entered the public realm that the AI services of big firms like Amazon, Microsoft, IBM, they all had these issues.

Joy Buolamwini: 00:10:56

Yeah, so after playing around with my Aspire Mirror, I then started to learn that beyond face detection or face tracking, you have facial recognition technologies being used for identification by police departments. And then I started noticing that some of these systems also would try to guess your gender, gender classification. And so once I had a sense of the different types of facial recognition tasks, I decided to focus on the gender classification systems of the companies that you mentioned. And it wasn’t just my face, it turned out what I was observing was actually a broader pattern and that became the basis for my master’s thesis at MIT. That’s where it started.

00:11:45

And so it showed that there was gender bias, yes. The systems overall work better on male labeled faces than female labeled faces, but it also showed phenotypic bias. And I say phenotypic bias intentionally because the way that the dataset, the Pilot Parliaments Benchmark that was created for this research was labeled was by the Fitzpatrick Skin Type Classification. And so I worked with a board certified dermatologist who I had met through the Rhodes Scholar Network. She was from South Africa, but had relocated to the Massachusetts area. We met at the 40th Anniversary of Women Rhodes Scholars. I mentioned my work. She said, we should be in touch. I remember visiting her home and she has a bunch of kids. They had different skin types, which all looked white to me.
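As a rough illustration of the kind of disaggregated evaluation described here, the sketch below computes classifier accuracy separately for each intersection of gender label and skin-type group, rather than reporting a single overall number. It is a minimal sketch, not the study's code: the `records` rows are invented, and the binning of Fitzpatrick types I-III as "lighter" and IV-VI as "darker" is an assumption made for the example.

```python
from collections import defaultdict

# Hypothetical audit records: (gender label, Fitzpatrick type 1-6, classifier correct?).
# These rows are invented for illustration; they are not data from the research.
records = [
    ("male", 2, True), ("male", 1, True), ("male", 3, True),
    ("male", 5, True), ("male", 6, False), ("male", 4, True),
    ("female", 2, True), ("female", 3, True), ("female", 1, False),
    ("female", 5, False), ("female", 6, False), ("female", 4, True),
]

def skin_group(fitzpatrick_type):
    """Bin the six Fitzpatrick types into two groups (assumed binning for this sketch)."""
    return "lighter" if fitzpatrick_type <= 3 else "darker"

def accuracy_by_subgroup(rows):
    """Return accuracy for each (gender, skin group) intersection."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for gender, ftype, is_correct in rows:
        key = (gender, skin_group(ftype))
        total[key] += 1
        correct[key] += int(is_correct)
    return {key: correct[key] / total[key] for key in total}

# A single aggregate number can hide large gaps between subgroups.
overall = sum(ok for _, _, ok in records) / len(records)
by_group = accuracy_by_subgroup(records)

print(f"overall: {overall:.2f}")
for key in sorted(by_group):
    print(key, f"{by_group[key]:.2f}")
```

With these made-up rows, the overall figure looks moderate while the per-subgroup figures differ widely, which is the reason for reporting results by subgroup and not only in aggregate.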

00:12:43

And so she was pointing out the differences and that was actually fascinating because we got into a broader conversation about bias in health and healthcare and also skincare. Even the Fitzpatrick Skin Type Classification itself, in the ’70s when it came out it had four classifications. I would say three categories of what will likely be perceived as white and the fourth category was for everyone else in the world.

Jon Krohn: 00:13:16

Oh, my goodness.

Joy Buolamwini: 00:13:20

The whole world. The global majority wasn’t reflected. They later expanded it to have three categories instead of that one category for darker skin and so you ended up with six. Already it was a limited classification system, but it was also the least racist of the various skin type classification systems that were out there. This one was coming from dermatology, but you also had others coming more from anthropology. And so sometimes people would associate characteristics with particular skin classes, which still happens. Welcome to racism. Which means that phenotypic features and classifications definitely map onto demographics and cultural and social categories for sure. I’m specific that we labeled it by Fitzpatrick because that was the phenotypic approach, which was a contribution of that research. And that also then maps on to race, but not in a clear cut way as it would be.

00:14:31

Because the other thing I looked into was within a particular racial category, you would have a lot of intra-class variation. You might have somebody, Taye Diggs who’s really dark skinned, and Mariah Carey. Barack Obama, Michelle Obama, they both identify as black, and they have different skin types, different skin tones. That was the other thing I learned, there was a difference between skin type and skin tone. And you mentioned having a more technical audience here, so yay, can I nerd in a little bit on this?

Jon Krohn: 00:15:06

Yes, please.

Joy Buolamwini: 00:15:07

Yes. When it comes to skin color and skin type, skin type is the skin’s response to UV radiation, sunlight. Are you going to burn? Are you going to tan? Are you going to do something in between? Just because you have a farmer’s tan doesn’t mean you have a different skin type, but you do have different colors. And so that’s why, depending on the audience, I might be really specific to say that we labeled it by the skin type and not the skin tone.

Jon Krohn: 00:15:42

Got it, got it, got it.

Joy Buolamwini: 00:15:42

And it can vary depending on which skin patch you’re looking at, its exposure to elements or hard work, all kinds of various things and also what happens when there’s aging and photo aging and all the great things that can impact the skin. I also learned a lot more about skin because along my path I started working with Olay, with Procter and Gamble. And so they focus on skincare and they have really interesting imaging technology where you can even see beneath the surface of the skin. There are just so many fascinating elements about skin by itself. And so I started with this art project to look like Serena Williams, maybe now Sha’Carri Richardson, Coco Gauff. And next thing I know, I’m learning about skin classification systems in order to do this research.

Jon Krohn: 00:16:49

Gurobi Optimization recently joined us to discuss how you can drive decision-making, giving you the confidence to harness provably optimal decisions. Trusted by 80% of the world’s leading enterprises, Gurobi’s cutting-edge optimization solver, lightweight APIs and flexible deployment simplify the data-to-decision journey. Gurobi offers a wealth of resources for data scientists: webinars like a recent one on using Gurobi in Databricks, hands-on training, notebook examples and an extensive online course. Visit gurobi.com/sds for these resources and exclusive access to a competition illustrating optimization’s value with prizes for top performers. That’s G-U-R-O-B-I.com/sds.

00:17:33

Very cool and interesting to read about the skin tone versus skin type thing. To make sure I got that technical term right, my skin type is always the same no matter what. But I just returned from two weeks where I was outdoors a lot in a bathing suit so now my skin tone is different on my face versus my really untanned butt.

Joy Buolamwini: 00:18:01

I will not comment on the skin variations on your body, but yes-

Jon Krohn: 00:18:10

That’s the idea.

Joy Buolamwini: 00:18:11

… your skin type has not changed.

Jon Krohn: 00:18:14

Right, right, right. Got you.

Joy Buolamwini: 00:18:15

The appearance of your skin that was exposed to sun has.

Jon Krohn: 00:18:22

This skin type issue of pale males, they form a lot of the training data sets for machine vision algorithms. And with your initial research, it was a lot of machine vision stuff, but you know a lot more than I do, but it seems like these pale male data sets are predominant not only in machine vision, but in a lot of the training data sets for machine learning?

Joy Buolamwini: 00:18:52

Yes. I will say, especially in the time period we’re talking about. When this research was coming out, I submitted my thesis in 2017, the published paper came out early in 2018. There have since been some efforts to diversify different types of data sets. Not all of those efforts I would necessarily condone. I think Google came under fire for bringing in a subcontractor that was soliciting images from homeless people to diversify their data sets. I believe there was a company called CloudWalk, double check me fact-checkers, please, that was being integrated in Zimbabwe, presumably to diversify their data sets as well.

00:19:51

And so I think we are in a situation where the data sets that have been collected that are readily available reflect what I call power shadows. For example, when we were looking at face data sets, many of those data sets were of public figures. If you take politicians to be public figures, then you look at who holds political power. Newsflash! Men. It wasn’t so surprising that if you were using data sets that were reflecting people who held political power, that you would have an over-representation of men relative to the global population. Then where is the pale happening? Where are we getting these images from? We’re getting these images from online from different media sources. And so then you also ask who is featured in the media? Who has more airtime?

00:20:51

And since we’re talking to a more technical audience, another factor that came into this is because so much of the data scraping that happens is automated, the face detector that’s used to determine if an image online has a face in it, those face detectors themselves also had a bias against dark skin. Even if you did have the images with darker skin public figures available, they could have been missed by the face detector. You have these layers and cascades of bias so that by the time you get to your handy dandy little dataset for what you might be doing, it’s inherited all of these power shadows. You have the power shadow of the patriarchy, you have the power shadow of white supremacy, and all you thought is you ran a little crawler and a scraper. You weren’t even thinking about any of these per se. It was more so convenience sampling, which as somebody who did computer science degrees, et cetera, this is literally how it was done. There was no intent to be harmful.
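To make the cascade concrete, here is a minimal sketch of how an automated scraping filter can shift a dataset's composition before anyone trains a model on it. All of the numbers are hypothetical, and `surviving_share` is an illustrative helper, not part of any real scraping library: it simply assumes each group's images pass the face-detector filter at that group's detection rate.

```python
def surviving_share(source_share, detector_recall):
    """Share of each group among the images that pass the face-detector filter.

    source_share: fraction of each group among the images available online.
    detector_recall: fraction of each group's faces the detector actually finds.
    """
    kept = {group: source_share[group] * detector_recall[group] for group in source_share}
    total_kept = sum(kept.values())
    return {group: kept[group] / total_kept for group in kept}

# Hypothetical inputs: the online source is already skewed (the "power shadow"),
# and the detector misses darker-skinned faces more often.
source_share = {"lighter": 0.70, "darker": 0.30}
detector_recall = {"lighter": 0.95, "darker": 0.60}

dataset_share = surviving_share(source_share, detector_recall)

for group in source_share:
    print(f"{group}: source {source_share[group]:.2f} -> dataset {dataset_share[group]:.2f}")
```

Under these assumed rates the darker-skinned share of the final dataset ends up smaller than its share of the source images, even though the crawler itself never looked at skin type. Each automated stage with uneven error rates compounds the skew from the stage before it.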

Jon Krohn: 00:22:04

These power shadows, if we naively collect our machine learning training data sets, we’ll end up recapitulating these historic power shadows in our modern machine learning models, pale and male.

Joy Buolamwini: 00:22:22

And also makes them worse. There was a recent Bloomberg data investigation piece that came out. They said it was inspired by the Gender Shades research, and what they did is they had AI image generators generate people’s faces based on prompts. What does a CEO look like? What does a social worker look like? What does a criminal look like? And they did all of these explorations, and what they found is the systems didn’t just replicate the biases in society, they made them worse. It entrenches the stereotype because you’re doing it probabilistically. That’s even further from where we are because sometimes I do hear, well, society’s biased, it’s reflecting society. It’s amplifying the bias of society.
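One simple way to see how a probabilistic system can amplify a skew instead of merely reflecting it is the toy example below. It is a deliberately extreme sketch under stated assumptions: the training labels are invented, and `argmax_generate` is a hypothetical stand-in for a system that always favors its single most likely output. Real image generators sample and are far more complex, so this shows the direction of the effect, not its size.

```python
from collections import Counter

def argmax_generate(training_labels, n_outputs):
    """Always emit the most frequent training label (an extreme, mode-seeking generator)."""
    most_common_label, _count = Counter(training_labels).most_common(1)[0]
    return [most_common_label] * n_outputs

# Hypothetical training data for the prompt "CEO": skewed 70/30.
training = ["man"] * 70 + ["woman"] * 30

outputs = argmax_generate(training, 100)

train_share = training.count("man") / len(training)
output_share = outputs.count("man") / len(outputs)

print(f"share of 'man' in training data: {train_share:.2f}")
print(f"share of 'man' in generated outputs: {output_share:.2f}")
```

The training data is skewed, but the outputs are more skewed still, because favoring the most probable answer discards the minority cases entirely. Any system that leans toward high-probability outputs moves in this direction to some degree.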

Jon Krohn: 00:23:17

Wow, I actually didn’t know that. It’s obvious to me now that you say it. And I assumed that it was an issue of, obviously we have these historical biases. And I don’t even know why someone would make that argument to say, well, it’s just representing these existing historical biases. Obviously that’s a problem in and of itself, but it makes sense to me, given what I know about the way that machine learning models are trained, that in this probabilistic way it’s entrenching and amplifying the biases, not just recapitulating these unwanted biases.

Joy Buolamwini: 00:23:53

I think to your point, when you think it out, it makes sense, but it’s not immediately obvious.

Jon Krohn: 00:24:01

It has never occurred to me, and it is the kind of thing that I’m thinking about literally every day because my machine learning company finds people for jobs, and so we need to be certain that it is not favoring some group over another. Because it’s a nurse role, it shouldn’t favor female applicants, because it’s a firefighter role it shouldn’t favor male applicants, for example. Even though in historical data, those genders are overrepresented in those particular occupations. Over the course of this research that you’ve been doing, you’ve worked closely with a lot of other researchers like Timnit Gebru. How have these kinds of collaborations and partnerships influenced your journey in shedding light on AI biases?

Joy Buolamwini: 00:24:53

What it means when I make things like the shield, I tend to make multiple. This one is for Timnit-

Jon Krohn: 00:25:00

I didn’t expect another one.

Joy Buolamwini: 00:25:01

… notice the skin type, and this one is for me [inaudible 00:25:06].

Jon Krohn: 00:25:01

Oh.

Joy Buolamwini: 00:25:05

If you go to gs.ajl.org, gendershades.ajl.org, you’ll see us with our respective shields. Other than excuses to make swag for my various collaborators, part of it has been having a sense of community, particularly as somebody coming from a marginalized position in computer science. I remember when I was at MIT and I shared I wanted to do this work, people didn’t stop me, but it was, “Why would you spend… Go for it kid,” but not necessarily, “Okay, this sounds like a very promising area of research.” I was, we’ll see. And so meeting Timnit around that time and her having that computer vision background was a lot of fun for me because one, she helped me refresh my knowledge. So I remember this project she gave me to do, which was basically to create just a quick web app that would make it really easy to go through various data sets, various face data sets. So that was fun, chopping it up technically. But then, when it came to, for example, submitting one of my first papers in this area, I had done some other work in public health. So different hat, different time, new hat, this area. She’s the one who would handle communicating with Reviewer Number Two. Meanwhile I’m like, “Why don’t they understand, the Fitzpatrick scale is the point.” [inaudible 00:26:49] like, “All right, all right. I’ll communicate with diplomacy.” So, I learned a lot of diplomacy actually from working with Dr. Gebru for sure.

00:27:02

Also, because she was ahead of me, she could give me perspective setting. So, I’d be upset about one thing or the other. And she’s like, “You know what? In the broader scheme of things, you’re actually in a really good position.” So, I found that to be really helpful. Also, senior scholars like Dr. Safiya Noble, and I’m not sure if you’ve seen the Rolling Stone feature where Timnit, Safiya Noble, Dr. Rumman Chowdhury, Seeta is there as well. You have to check this out. This is why I got the guitar. I needed a reason to, I’ve always wanted a Les Paul, and you know, degrees and life happen. So when we had this epic Rolling Stone feature where it says they tried to warn us, and you have this sisterhood of very highly competent women, we’re all doctors, all together. I thought, “What a way to commemorate this. It is Rolling Stone after all.” So I went to my local Guitar Center and I got my nice little Les Paul Gibson with the Appetite Burst/…

Jon Krohn: 00:28:16

I’ll be sure to include that in the show notes. So yeah, that’s something that if you are one of the viewers that watches our video version, which is a minority of you, you will have seen that I have a guitar in my background there all the time and I’ve even, I’ve played that in some episodes. But so, Joy was showing me before the episode started that she had a brand new Les Paul that was looking very cool. And…

Joy Buolamwini: 00:28:43

… I’ll go get it right now. One sec. Celebrating, back to your point, collaborators.

Jon Krohn: 00:28:50

…Yeah.

Joy Buolamwini: 00:28:51

So this is why I like having collaborators.

Jon Krohn: 00:28:54

[inaudible 00:28:54] this beautiful Les Paul. And then in addition, before we started talking about guitars, we had another thing that our audio only listeners won’t be able to appreciate, is that Joy has these AJLs, so Algorithmic Justice League shields and they’re color coded to members of the AJL. I didn’t expect her to have Timnit, Dr. Gebru’s on the floor right next to her, but she’s got a bunch of AJL shields, including one behind her throughout the recording here. And so, we should talk about the AJL. So, what prompted you to establish it? I guess we kind of got a sense of that already now, because it sounds like this idea of community and having people who have been ahead of you on some things, maybe people who can respond diplomatically to Reviewer Number Two. What are the primary objectives of the Algorithmic Justice League and why did you establish it?

Joy Buolamwini: 00:29:53

Sure. So it has evolved. At first I thought it sounded cool, like Algorithmic Justice League, I want to be part of that. And I thought it was a way of putting an umbrella over the type of research I was doing at the Media Lab. So with the algorithmic auditing, showing bias in models from tech companies. But AJL is bigger than the research and it went beyond the research because it focused also on the storytelling. So when you see that origin story in the film Coded Bias, we are focused on how do we elevate the excoded, those who are harmed by AI systems? How can we prevent the development of harmful AI? And so that’s what we focus on at the Algorithmic Justice League, preventing AI harms and increasing accountability in the use of AI systems.

00:30:47

Also, I wanted to have a lot of fun. And so, we do all kinds of playful collaborations. One of my favorites, and you’ll see this with the book launch, is with our senior advisor, Dr. Sasha Costanza-Chock, the author of Design Justice, who used to be our director of research and design at AJL. And they’re also a music producer and musician of many kinds. So, they created the beats for facial recognition blockers, FRBs, under their agent name. We have agent names, again, we like to have fun. So Agent Splice on SoundCloud.

Jon Krohn: 00:31:31

What’s your agent name?

Joy Buolamwini: 00:31:32

I have too many. So currently I’m Dr. Justice.

Jon Krohn: 00:31:37

Nice.

Joy Buolamwini: 00:31:38

Yeah, I’ll stick to Dr. Justice for today. And then, as people work on various projects with AJL, usually they’ll graduate with the AJL agent name, and all full-time folks have agent names. So AJL agents, they know who they are, they’re out there. They might be in a company you know.

Jon Krohn: 00:31:58

All right. Data Science and Machine Learning jobs increasingly demand Cloud Skills—with over 30% of job postings listing Cloud Skills as a requirement today and that percentage set to continue growing. Thankfully, Kirill & Hadelin, who have taught machine learning to millions of students, have now launched CloudWolf to efficiently provide you with the essential Cloud Computing skills. With CloudWolf, commit just 30 minutes a day for 30 days and you can obtain your official AWS Certification badge. Secure your career’s future. Join now at cloudwolf.com/sds for a whopping 30% membership discount. Again that’s cloudwolf.com/sds to start your cloud journey today.

00:32:44

So it sounds like they would be doing a lot of good if they are. So, you talked about preventing harms and elevating the excoded. So far in the episode we’ve only talked about machine vision, facial recognition specifically, and that is a prominent example and it’s covered in a lot of detail in the Coded Bias film, for example, which I highly recommend to folks if they haven’t checked it out already, it is on Netflix. And yeah, other than that facial recognition example, you have found many examples of algorithms that have harmful effects and that the AJL can be tackling. So, could you share more about the potential consequences of these kinds of algorithms in fields like employment, finance, law enforcement?

Joy Buolamwini: 00:33:32

Absolutely. When I think of the law enforcement piece of it, I go to, I think of the school-to-prison pipeline. Where you get caught up in the criminal justice system or criminal law system at a very early age. So one thing we’ve been seeing is the adoption of first e-proctoring tools during COVID. You’re taking the test remotely, we want to see if it’s actually you. So this might be looking at people’s keystrokes. It could use machine vision as well. But now that you have AI detectors or supposed detectors, some of the harms reports we get are people who have been wrongfully accused of cheating. So their academic integrity is being questioned and now the onus is on them. Not only did you have to prepare for the stressful final or whatever else it was, and some people setting up contraptions just to have their faces lit to be seen, to take the test, now you have to prove that you actually created what you made.

00:34:50

And some of what we’ve been seeing are that students with English as a second language, going back to the bias of many models, English tends to be the language that is “the default” or the norm despite that not being the case all around the world. But it is the lingua franca for the most part of machine learning and AI. And so, here you’re in a case of a problem created by AI with then a potential AI “solution” leading to more problems. And part of that is an issue of confirmation bias. So if you’re from a marginalized community or community that’s already assumed to be engaged in criminal activity or to have lesser character, when you are given the stigma of cheater etc., it’s more likely to be believed than if you are in a different group. So I think that’s an area where, again, along that pipeline of criminal justice, it can start with an accusation of cheating. But also, you have many companies using the goal of preventing fraud as the motivation for adopting invasive surveillance technologies that are fueled by AI. More on the crime side of things. So you have voice clones, AI voice generators, and a very rich data set of so… I mean, we got podcasts. My voice is out there. Your voice, how many, you said Episode 727?

Jon Krohn: 00:36:38

Yeah, your episode will be 727.

Joy Buolamwini: 00:36:41

Yeah, your voice is out there. And so, now people are able to create AI voice clones that are part of scams. And so, in the introduction of the book, I write about one woman who gets a call, she hears her daughter, “These bad men have me, help.” She’s sobbing. It’s a high stress situation. Another voice comes on demanding money. Eventually she’s able to see that her daughter’s safe on the trip that she thought her daughter was on. But it’s one of those examples that is growing. And it’s also preying on your vulnerability, because it’s the voice of your loved one. It can also be preying on your money. So the biometrics that were meant to add an added layer of security for, let’s say accessing your finances, it can be compromised with biometrics. And so, that’s another area to consider.

00:37:40

Something else, I have the guitar out, we were talking a little bit about the playfulness and the storytelling pieces of AJL. We definitely are a place that supports and welcomes artists. And so, another issue with AI is the process of creation. So if the process of creation entails gathering data without permission and without compensation and then profiting from that. We’re also in a place, I don’t yet have a term, it’s not… I want a term for AI that’s like blood diamonds. That gives you a sense the way in which it was produced is problematic to say the least. I don’t quite have that term, maybe dirty AI, I don’t know it just yet. And so, I reflect on this in the book about my own questions when I was taking faces off the internet. I am implicated in these processes. I was doing it in a way that was protected based on the BU law clinic review of my research processes for fair use, I wasn’t putting a company on top of it and selling it. But nonetheless, I was running into these ethical questions. Is it okay for me to take the faces of these people that are online? And some of the laws said yes, because they are public figures. Or some of the laws said yes because we allow the use of government data for research.

00:39:14

But something I couldn’t quite figure out, was when you had to do IRBs, Institutional Review Boards so you don’t end up doing really unethical things based on the past, I found that computer vision research got a complete pass. So when it came to human subjects research, if I’m going to be interviewing people, you have to go through all of these checks to ensure that you’re doing the research in an ethical manner. When it came to collecting people’s biometric data, which I thought would be classed under medical data, I had a pass because it was computer vision research. The researchers around me, they want their pass too. So by asking these questions, I realized if I really push on this, I’m going to make it harder for myself and for other researchers if you actually have to do deeper, if you can’t get the exemption. But I was asking myself, “Should we be getting this exemption? This is highly sensitive data.”, and you can’t anonymize a face.

Jon Krohn: 00:40:25

Right. [inaudible 00:40:27]

Joy Buolamwini: 00:40:26

In that if I have the photo of your face, it is not anonymous.

Jon Krohn: 00:40:31

Yeah, yeah, yeah.

Joy Buolamwini: 00:40:32

There are other steps you can take beyond that, but that was the question I had and I kind of got that sharp elbow to keep it moving. So I grapple a lot with the processes I critique having also learned those processes and use those processes in my own evolution as a computer scientist and an AI researcher and then realizing things you did maybe eight, 10 years ago wouldn’t necessarily be the way you would do it now with a deeper understanding.

Jon Krohn: 00:41:06

Mm-hmm. Yeah, those are all really good examples of use cases where we have issues, certainly the bias that we see in misidentifying people cheating on tests, all the way through to misidentifying them for crimes and how these are more likely to impact excoded people, as you described them. All the way through to the profiting from others’ art that algorithms can do today. On the excoding note, your TED Talk about the coded gaze made you a leading voice in AI bias discussion and you would use that platform to raise awareness and propose solutions like the incoding movement. We’ve talked about people who have been elevating the excoded. I guess that’s kind of a synonym, it’s just the inverse of the incoding movement. Or do you want to elaborate on the incoding movement and why this pillar could lead to more equitable technology development?

Joy Buolamwini: 00:42:14

Oh, yes. No, that’s a great question. So, I believe I recorded that talk in the fall of 2016, and so I had more of an engineering hat on. “All right. We got pale male data, let’s diversify the data set.” If people are being excluded, let’s include them. But even then, I remember I wrote this Medium, Medium at the time was an up-and-coming writer’s platform, right? So I wrote a Medium article grappling with what are the costs of inclusion and what are the costs of exclusion? So in the TED Talk 2016, I was thinking of an incoding movement, and as my understanding evolved, I realized the answer isn’t always to include people. And so, when we are seeking justice, it’s about agency, it’s about rights, the right not to be included. So there are costs of exclusion, right? So let’s say you’re excluded from a data set for… A class of people are excluded from a data set of pedestrian tracking algorithms. “Yeah, people are excited about the future of autonomous vehicles.” Maybe not everyone.

00:43:28

And from our shared academic institution, Oxford University, there’s actually, I remember reading this pre-release where they had looked at heights, and people who are shorter were more likely to be missed and hence not detected, right? Short people like me, children, others, that’s a concern. Georgia Tech researchers looked at skin type, they used Fitzpatrick in that case, and they found, “Oh, look at that, the darker, the more likely to be hit.” And also when you’re thinking about computer vision, you also want to think about ableism. So, many of these data sets are created assuming somebody’s going to be walking on two feet, which is not always the case. So, there are all of these factors to consider.

Jon Krohn: 00:44:25

Mathematics forms the core of data science and machine learning. And now with my Mathematical Foundations of Machine Learning course, you can get a firm grasp of that math, particularly the essential linear algebra and calculus. You can get all the lectures for free on my YouTube channel. But if you don’t mind paying a typically small amount for the Udemy version, you get everything from YouTube plus fully worked solutions to exercises and an official course completion certificate. As countless guests on the show have emphasized, to be the best data scientist you can be, you’ve got to know the underlying math. So check out the links to my Mathematical Foundations of Machine Learning course in the show notes or at jonkrohn.com/udemy. That’s jonkrohn.com/U-D-E-M-Y.

00:45:09

Yep, very well said. And a great summary of some of the things that we absolutely need to be considering as we develop algorithms. I want to dig more into your research in a moment. But just before we do that, I’ve got a question for you here about how your particular background might have shaped your perspective on technology. So this is right from chapter one of your book. So you’re multicultural, you’re born in Canada, although it sounds like you didn’t… I was trying to talk to you about Canada before we started recording, where I’m from. But yeah, you were raised in Mississippi and you have connections to Ghana. You also have an artist mother, a scientist father. So a lot of different perspectives. How did all of that affect your own perspective on technology?

Joy Buolamwini: 00:46:03

I think going back to this notion of the cost of inclusion and the cost of exclusion, being from a marginalized identity. So as somebody in computer science, right? There weren’t a lot of people who look like me in the classes that I would take, or if I would, any space I would show up, I just wasn’t necessarily the norm. And I got used to that, so much so that I thought it didn’t really matter. And then I remember the film Hidden Figures came out, which talks about the space race and the Black women mathematicians who calculated the trajectories for John Glenn and all of these astronauts.

Jon Krohn: 00:46:48

Such a good movie.

Joy Buolamwini: 00:46:50

Right?

Jon Krohn: 00:46:51

Love it.

Joy Buolamwini: 00:46:52

And I remember watching it in grad school. I was at MIT, they were doing a screening. So I had an opportunity to meet the author and she-

Jon Krohn: 00:47:01

Wow.

Joy Buolamwini: 00:47:01

… did a talk and all of that. And I remember watching it, and there’s a moment where the women come together, they’re like marching in. It’s the squad goals moment, where the East Computing women and the West Computing, they’re all coming together. And I was just so moved by that experience and it reminded me of the first time I went to the Grace Hopper Conference, which is the largest conference for women in tech. I think well over 15,000 participants now, from AnitaB.org. And just being surrounded by so many women, I’d almost forgotten what it was like. I was like, “Oh, this is kind of nice.” But it reminded me of what was missing.

00:47:51

And so, I think that sometimes can happen when we’re creating tech, we’re so used to it being a particular way that the cost of exclusion is, we don’t even know what we’re missing. The cost of inclusion is, “What happens when my data is taken against my will?” Right? I’m an artist, I have a style that I’ve spent years of my life perfecting, and now somebody’s taking that. That’s a cost of inclusion. I’m in a surveillance lineup and I’ve been misidentified as somebody else. This happened to Porcha Woodruff. She was arrested while eight months pregnant, sitting in a jail cell because of a false facial recognition match fueled by AI [inaudible 00:48:36].

Jon Krohn: 00:48:36

And that just happened, right? That was-

Joy Buolamwini: 00:48:38

That’s this year.

Jon Krohn: 00:48:38

… a reporting. Yeah, that’s this year.

Joy Buolamwini: 00:48:40

This is this year when they report… And I think it’s so important, because so often I hear, “Well, you know, that research was from back in the day.” It’s not that back in the day. And even the new research that comes out on the performance of various facial recognition technologies continues to show disparities that are large relative to one another across different subpopulations, demographic groups and their intersections. So even that is not a full argument. The other part though that we see time and time again is the cutting edge of the research isn’t at once integrated in the products that are being adopted by older institutions or more bureaucratic institutions. So it could be the case that algorithms developed in 2015 are what’s powering what’s leading to someone’s false arrest in 2023. It could also be the algorithm developed yesterday, right? I don’t want to just make it a dated situation, but I think we can give ourselves a false sense of progress if we don’t consider product lifecycles, in that not everything gets updated.

00:49:53

I think about it like a car recall. Yeah, now there’s an issue with the tires, but it doesn’t mean all the tires get replaced all at once, which you would think because it’s tech, it would be easier to do, but you still have to think about the contracts. You have to, now you go into the people processes, which I learned a lot more about as I got further into the Algorithmic Justice League. So what seemed like could maybe be straightforward tech solutions, I quickly learned were social technical problems where you couldn’t only think about the technical aspect of it, which is personally where I like to be. I got into computer science so I wouldn’t have to deal with messy humans. Here we are. Here, it clearly didn’t work out. But I could, like, “Ooh, nice math. People are out of it.” But that’s not how it works. Even you can lie with statistics, as we all know. So it’s not as neutral and objective as I would have hoped for it to be. Meaning we still have to do that hard work of self-development and reflection as well as societal development and reflection.

Jon Krohn: 00:51:06

Yeah. I recently did an episode number 703 with Professor Chris Wiggins of Columbia University. He’s also the Chief Data Scientist at the New York Times, and he did an episode on the history of data science. And it’s his position that everyone in this field should also have some social sciences education because of how the algorithms that we deploy all have an impact on the real world, on society, that we can’t keep these things apart. On the note of your research, so we’ve already talked about how in your master’s and doctoral thesis from MIT, they involved concepts of the coded gaze, gender shades, we’ve talked about those. And have we talked about gender shades? Actually, we haven’t done that yet, have we? We talked about shades.

Joy Buolamwini: 00:51:55

A little bit, in so much as you mentioned that the research showed bias in products from IBM, Amazon, Microsoft etc.

Jon Krohn: 00:52:04

Yeah. So maybe let’s dig into that a bit more. So, there’s things like the Pilot Parliaments Benchmark, the PPB, and it’s your position that it’s essential for AI developers to take an intersectional approach to analyzing data. So yeah, do you want to fill us in on… I’ve just opened a bunch of cans of worms here, but gender shades, Pilot Parliaments Benchmark, and yeah, intersectional approaches to analyzing data. I’m sure our viewers would also love to hear about it.

Joy Buolamwini: 00:52:38

Yes. Absolutely. So, the reason I even came to intersectionality was because I started reading outside of computer science and realized that there was a lot to learn. And so, in graduate school, I was introduced to research on anti-discrimination law from Kimberlé Crenshaw. And what I found with her research, is she found the limits of single axis analysis when it came to looking at discrimination through US law. So for example, if you have a law that says you can’t discriminate on the basis of race, or you have a law that says you can’t discriminate on the basis of gender, she was finding that people at the intersection of gender and race on a particular issue were running into problems. For example, let’s say you’re at a company and the company hires Black men and you’re a Black woman. So if you say, “I’m being discriminated against.” They will say, “We hire Black people.” And the data would show they hire Black people, but it’s not accounting for the gender aspect of it.

00:53:50

Same for Asian women. There can be different intersections. And so as I was looking at this research she did, when it came to anti-discrimination law, I thought, “Huh, what would happen if we looked at the intersections, if we weren’t just doing single axis analysis?” So if I looked at demographics, so gender and phenotype, skin type in this case, would there be a difference? Would the story vary with a sharper lens? And I didn’t know the answer. That was part of the research. And after we ran all the studies, et cetera, did our fun experiments, we found that, oh my goodness, if we look by gender and we look by race alone, yes, there are disparities.

00:54:39

So I think it was probably 12 to 18%, depending. When we looked at the intersection, we would see a case, for example, where the gap could be as large as around 34% difference. If you compare, let’s say lighter males and darker females with one of the companies. And we didn’t do all of the intersections, we didn’t look at age, et cetera, we didn’t do all of the world. The Pilot Parliaments Benchmark is a data set I created because when I looked at existing data sets, they were largely pale male, or the ones that were more gender balanced because they were meant to assess gender classification, still didn’t contain that many dark-skinned people. So I was surprised to find that by pure numbers alone, my smaller dataset actually represented more darker-skinned individuals than some of the larger data sets that were out there.

00:55:44

And so the Pilot Parliaments Benchmark became necessary because I realized the tests we were using to see how well systems performed didn’t reflect society. So we were getting a false sense of progress. And this false sense of progress is being used to justify the adoption of various facial recognition technologies. And so I realized, as I started, I go from coding in a white mask, doing this class project, and you start peeling the layers. So this layer made me not just think about facial recognition technologies, but any machine learning approach, how are we evaluating it? The way we’re evaluating it, if it doesn’t include an intersectional lens, means we’re not seeing the complete picture. And so this aggregate level view, which if you’re wanting to compare various algorithms, you want to know how it did overall. Okay, let’s say 95% of your benchmark is lighter-skinned individuals.

00:56:51

You could fail on all darker-skinned folks and still get a passing grade. So it was something akin to that we were seeing with the research I was doing with the Gender Shades project. What happens when the test represents more of the real world? Let’s see. And that was what we did. So that’s what was my MIT master’s thesis. Let’s make a test that’s more intersectional and see how these companies perform. In that case, binary classification task of gender, not because gender is a binary, but because these were the categories that were being used. And so I was really concerned that on a test where you had a 50/50 shot of getting it right, we were seeing these types of disparities.
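To make the arithmetic behind that point concrete, here is a minimal sketch. The 95/5 split comes from the example above; the per-group accuracies are hypothetical worst-case values chosen for illustration, not results from the Gender Shades study, and `aggregate_accuracy` is a made-up helper name.

```python
# Hypothetical benchmark: 95% lighter-skinned faces, 5% darker-skinned faces.
# The per-group accuracies are illustrative assumptions, not measured results.
benchmark = {
    "lighter": {"share": 0.95, "accuracy": 1.00},
    "darker": {"share": 0.05, "accuracy": 0.00},
}


def aggregate_accuracy(groups):
    """Overall accuracy is the share-weighted mean of per-group accuracy."""
    return sum(g["share"] * g["accuracy"] for g in groups.values())


overall = aggregate_accuracy(benchmark)
print(f"overall accuracy: {overall:.0%}")
for name, group in benchmark.items():
    print(f"{name} accuracy: {group['accuracy']:.0%}")
```

With these assumed numbers the aggregate score is 95% even though accuracy on the darker-skinned group is 0%, which is why a per-group breakdown matters.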

00:57:45

So my informed assumption was that if you were moving to something like facial identification, one to many matching, you were going to likely see even worse numbers. And that’s what we saw when the National Institute of Standards and Technology, taking inspiration from my master’s thesis, which was jarring for me. I was like, “Wait, they’re reading… Read the published papers, cite that one.” A little more rigor, but regardless, to do a more comprehensive test where they have 17 million photos as opposed to the 1,270 images I collected. But I do think something else I take from conducting the Gender Shades research is so often scale is seen as a necessary part of making impact. And I point out the fact that the Pilot Parliaments Benchmark was small enough to be collected by a grad student with little to no resources.

00:58:59

Small enough where I could hand label all of it. And so could Deb. When Deborah Raji, great scholar, wanted to intern with me at the Media Lab and most other people on the tech side, one of the things you have to do is hand label all of the data sets because then you also become the arbiter of truth. And you see how precarious, how shaky your ground truth is. So I remember when companies would have their internal teams replicating Gender Shades, and I’d get a call or a message about specific faces that they’re like, “So how did you label this one?” But I thought those cases were particularly interesting because they reveal the most about our assumptions.

00:59:51

For example, with skin type, in some places people bleach their skin. And so then there’s a question of, okay, the skin color is this way, but what is the skin type? The other thing I noticed is people from South Asia or South Asian background, it was almost like people would use other metadata to determine the skin type. So it wasn’t just what they saw on the skin. It’s like, oh, is it from an African nation? Is it from a different… And also, just because you’re in an African nation, for example, we did South Africa. You know a little something about colonialism. There’s an over representation of lighter skinned individuals in the South African Parliament. And it was also from a research perspective, an interesting way to answer the question, okay, are these differences an artifact of just the photo sets themselves? So it was nice to have that set of photos for that reason as well.

01:00:56

But we saw those disparities there, and within that, you could see the skin type range was more diverse within an African country. So then when I would look at data sets or analyses coming from an organization like the National Institute of Standards and Technology, what you would see are experiments where they would say this algorithm performed in this way on this population, and it would be at a nation level population. Which, depending on the nation, I don’t know. So let’s say Brazil, so much diversity. But now you have to think about just like when I was telling you about the face detectors failing on darker skin and that already having to deal with the various power shadows.

01:01:54

So now when you think about these data sets, we’re doing visa images. Who in the country is going to have the resources to be able to apply and obtain a visa image in the first place? So then I was realizing the proxies we were using aren’t actually going to necessarily give us a sense of a heterogeneous population in the first place. So the whole intersectional piece really came from observing what was wrong, because as a graduate student, I’m reading about all these breakthroughs, yet here I am coding in a white mask. So something’s off. And following that line of questioning, I will say, again, I wasn’t completely discouraged from doing the research outright. Maybe a few people are like, “How much do you know about math? Are you sure you want to get into AI, really, really?” But that didn’t deter me. And it was helpful, again, to have mentors and friends like Dr. Timnit Gebru. But the point I’m trying to make is, sometimes you can see an issue that feels apparent or you have an intuition, you still need to get the data. You can’t just say, I assume this, and now my opinion is fact. You have to go be a scientist, have a hypothesis, test it out, do that whole process and see, but don’t be discouraged if not everybody around you sees it at first.

Jon Krohn: 01:03:32

Nice. A great message there. And yeah, so this Gender Shades concept, to summarize it, is that when we have a statistical model or machine learning model, it’s like Statistics 101, where you learn that you can have a main effect, or an interaction term. And so in this case, this binary classifier, you’re predicting gender, it’s a task that you happen to have, there were a bunch of different APIs that existed at that time for big tech companies that did this task. So with that as the outcome, gender is the outcome that you’re predicting. You have, going into the model with each face, there is a label of what the gender is. There’s a label of the shade, the skin tone and-

Joy Buolamwini: 01:04:22

Skin type, skin type.

Jon Krohn: 01:04:24

Skin type, ah, skin type, damn. My own notes. Yes, the skin type, of course. So you have these two features going into the model, and if you looked at skin type alone or gender alone, you noticed issues. And so for example, one of the stats that I remember from that 34% stat you were giving on error rates. I don’t know why I’m visualizing this so easily, but I remember that for pale males, it was a 100% accurate on predicting gender. But with Black males, it was 99% accurate. But you’re like, okay, that’s worse. It doesn’t seem that bad. But when you look at dark-skinned women, that was the 34% wrong, that was the 77% stat.
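The difference between single-axis and intersectional analysis can be sketched in a few lines. The counts below are invented for illustration (they are not the Gender Shades figures), and `error_rates` and `gap` are hypothetical helper names.

```python
from collections import defaultdict

# Hypothetical toy results: (gender label, skin group, errors, total faces).
# These counts are invented for illustration only.
results = [
    ("male", "lighter", 0, 10),
    ("male", "darker", 1, 10),
    ("female", "lighter", 1, 10),
    ("female", "darker", 3, 10),
]


def error_rates(rows, key):
    """Error rate per group, where key((gender, skin)) names the group."""
    errors, totals = defaultdict(int), defaultdict(int)
    for gender, skin, n_errors, n_faces in rows:
        group = key((gender, skin))
        errors[group] += n_errors
        totals[group] += n_faces
    return {group: errors[group] / totals[group] for group in totals}


def gap(rates):
    """Difference between the worst and the best group error rate."""
    return max(rates.values()) - min(rates.values())


by_gender = error_rates(results, key=lambda g: g[0])  # single axis: gender
by_skin = error_rates(results, key=lambda g: g[1])    # single axis: skin group
by_both = error_rates(results, key=lambda g: g)       # intersection of the two

for label, rates in [("gender", by_gender), ("skin", by_skin), ("both", by_both)]:
    print(f"{label}: gap = {gap(rates):.0%}")
```

In this toy data each single-axis gap is 15 percentage points, while the gap between the best and worst intersectional subgroup is 30 points, so the sharper lens shows a disparity that either axis alone understates.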

Joy Buolamwini: 01:05:18

Also, that’s still an aggregate. So if you really get into the research paper and you look at the supplement material, in the paper we aggregated skin type one, two, and three as lighter and skin type four, five and six as darker. When we broke it all the way down, if you went to skin type six for women, you had error rates more around the 47%, closer to a coin-

Jon Krohn: 01:05:46

That’s just a coin flip.

Joy Buolamwini: 01:05:47

Right?

Jon Krohn: 01:05:47

That’s crazy.

Joy Buolamwini: 01:05:51

And then the other thing, just on the methodology piece, the way I calculated it was you didn’t get points off on the faces you didn’t detect. So some of the reasons why you saw that darker male performance was better in some instances was because I wasn’t… Again, I was kind of the nice teacher. I’ll aggregate, I’m not going to count you off for the faces you didn’t detect the way that I did it in my master’s thesis. So the methodology for the master’s thesis is slightly different from the Gender Shades published one in terms of what you get points for and what you don’t get points for. So if you’re really looking into the numbers and you want to see it, you can check out gs.ajl.org, and we have this cool visualization, so you can filter it by the predicted gender, by the skin type, et cetera. I’m not sure if you see this website, gs.ajl.org.
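The effect of that scoring choice can be illustrated with a small sketch. The predictions are made up, `accuracy` is a hypothetical helper, and neither rule is claimed to reproduce the exact methodology of the thesis or the published paper.

```python
# Each entry is (true label, predicted label); None means no face was detected.
# Hypothetical predictions, invented for illustration.
predictions = [
    ("female", "female"),
    ("female", "male"),
    ("female", None),
    ("female", None),
    ("male", "male"),
]


def accuracy(pairs, penalize_undetected):
    """Accuracy under two scoring rules.

    penalize_undetected=False: undetected faces are dropped from the score.
    penalize_undetected=True: undetected faces count as wrong answers.
    """
    if not penalize_undetected:
        pairs = [(truth, pred) for truth, pred in pairs if pred is not None]
    if not pairs:
        return float("nan")
    correct = sum(1 for truth, pred in pairs if truth == pred)
    return correct / len(pairs)


lenient = accuracy(predictions, penalize_undetected=False)
strict = accuracy(predictions, penalize_undetected=True)
print(f"lenient: {lenient:.0%}, strict: {strict:.0%}")
```

On the same five faces the lenient rule scores 2 of 3 detected faces correct, while the strict rule scores 2 of 5, so reported numbers are only comparable when the treatment of undetected faces is the same.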

Jon Krohn: 01:06:54

Yes, you’ve shared with me, and I’ll be sure to include it in the show notes as well for all of our listeners.

Joy Buolamwini: 01:07:01

So if people want to play around with it and see how it breaks down, et cetera, what people predicted, and by people I mean APIs from different companies, you can see that and you can see their country, you can see their skin type, you can see the gender, et cetera, and all of that good jazz for yourself. We decided not to release the photos of the actual parliament members, especially with the passage of GDPR. And so at the time we were collecting the dataset, GDPR wasn’t in effect, but when we decided we would want to make this data available, we decided to release the metadata and not the original faces of the people in the dataset, given where we are in our understanding and also where the laws are at this point.

Jon Krohn: 01:07:59

Yeah. So to make that crystal clear for our listeners, this Pilot Parliaments Benchmark, PPB, that you created to allow people to, or at least yourself I guess, after not having released it publicly-

Joy Buolamwini: 01:08:13

Or researchers did reach out for it, and yeah.

Jon Krohn: 01:08:17

So the PPB allows folks to take this intersectional approach to analyzing data. And the name of that comes from, I’m piecing this together now, but the parliaments part is that you were hand labeling photos of people from parliaments all over the world, people who have been elected to government, and then it’s pilot because it’s a relatively small dataset.

Joy Buolamwini: 01:08:42

1,270. This should not be your gold standard benchmark by any stretch of the imagination. The reason I was looking at parliaments was I wanted to find a dataset where I could get better gender balance. And so I went to the website of UN Women, United Nations Women’s Portal, and they had a list of countries by their representation of women in parliaments in those countries. In the top 10, you had three African nations, you had South Africa, you had Senegal, and you had Rwanda. And in the top 10 also you had Nordic nations, so Iceland, Sweden, Finland. There were Caribbean nations and so forth. And the reason I decided to focus on the Nordic ones, way up from the equator, and the African ones was to have a better distribution of opposite ends of the skin type. So remember I told you about Fitzpatrick, where once upon a time it was just category four, rest of the world, global majority. And then that got expanded.

01:10:05

I found it was really difficult to get labeling consistency for type four, which would be more so what was represented in more of the Latin American countries that also made it into the top four in the Caribbean nations for the representation of women in parliament. At that time, I was looking at the data. So the reason we ended up with parliaments was I was trying to find a place where I could have more of that gender balance. I looked at the US, we were so far from the top 10, it wasn’t even going to be included at all. But as I was doing parliaments, I looked at a lot of parliaments from all over the place. Singapore, looking at India, so many different parts of the world, and it just underscored to me how much power men hold. You definitely saw that gender imbalance time and time again. So that’s part of how that came about. As an athlete, I used to be… Also back to Oxford days. I used to be with the Oxford Blues pole-vaulting of all things.

Jon Krohn: 01:11:23

Oh, yeah? [inaudible 01:11:27].

Joy Buolamwini: 01:11:26

So something you might not have known or it’s probably out on the internet, a little pole vault.

Jon Krohn: 01:11:31

That actually is news to me. I didn’t know that. That’s cool.

Joy Buolamwini: 01:11:34

Yeah, so some pole-vaulting aspirations. Didn’t make it to the Olympics. I went to grad school. Here we are.

Jon Krohn: 01:11:43

Well certainly, I mean in almost all likelihood, making a bigger impact on this trajectory globally. And speaking of impact, how did companies like IBM respond to the findings of their APIs, say their gender classification API, working so poorly on particularly women with darker colored skin?

Joy Buolamwini: 01:12:09

Yeah, so I see the response to the Gender Shades research as a tale of three companies. Let’s start with IBM. IBM, when I sent the information, and when I sent the information to all of the companies, I didn’t tell them who their competitors were, just that there were companies they likely had heard of. So it was what I now call a coordinated bias disclosure, which builds on coordinated vulnerability disclosure from InfoSec. And so if you find a vulnerability, instead of making it public to everybody at once, you let the companies know ahead of time, you give them an opportunity to address it, and then when you announce it, if changes have been made, you can also announce those changes. So we did that with all of the companies, but IBM was really the only one that took us up on that offer.

01:13:09

So I remember, I think shortly after my 28th birthday, going to one of the IBM offices in New York, and then they had one in Boston. So I was literally running their updated algorithm, their model really, on the Pilot Parliaments Benchmark in my Lego-clad office at MIT. I’m just laughing because they were very much dressed in an IBM way, and then you have grad students all around, but it’s not just any grad students, it’s grad students at the Media Lab, of all places. So I still remember that. So I would say IBM engaged, they said, “Okay, we receive your research, talk to our teams.” They were, I believe, already working on a new model. And so the timing came to where I could say, “Hey, there’s a different model.” And they closed the disparity substantially between the first test and the second test. Change is possible for all the people who are telling me, “Well, the laws of physics, there are limitations, et cetera.” I was like, “There are physical limitations. That was not the problem here.”

01:14:24

It was more of a question of priority. I will say though, after that happened, I then read an Intercept article about IBM having worked with the New York Police Department to provide computer vision tools that allowed them to search for people by their skin color and facial hair, things like that. So essentially tools for racial profiling. So this was the thing where I’m thinking, “Oh, cost of inclusion.” Because you don’t necessarily want to be powering a surveillance state. And so there was that aspect of it. I do have to commend IBM, in 2020, they were the first company to say, “We are no longer going to sell facial recognition to law enforcement.” Microsoft followed saying, “We won’t sell it to law enforcement until there are laws.” So that was the tale of IBM, I would say engaged and proactive.

01:15:27

Microsoft. Microsoft, I gave them the same opportunity I gave IBM, they slept on it until there was a New York Times feature article, and then we started to hear from Microsoft. So Microsoft also took more of the technical route in terms of saying, “Okay, here we’ve updated our models, et cetera.” I remember when Microsoft released an update saying that they had changed their models, et cetera. It was the week I was on, I think Tech Review 35 Under 35. So I uploaded my Tech Review 35 Under 35. I have a YouTube of this, it’s probably offline, but I like to timestamp these things in case I’m ever questioned. I’m like, okay, you’re right, you right. Here it is. Okay, so I upload my 35 Under 35. I’m misgendered. My age is all kinds of off, and it’s the day that they released that announcement. And so that’s when I started thinking about this car recall analogy. Just because you’ve announced there’s an improvement, doesn’t mean it’s been integrated into the products. Or even if there is an improvement, doesn’t mean it works for all people. So I will say Microsoft did respond more with the research lens and then with the public pressure in 2020 also backed away. So those are two companies, responsive.

01:17:01

Then there’s Amazon. I think Amazon’s an example of what not to do. Amazon, we didn’t even test Amazon the first time. Deb Raji decided she wanted to do an internship with me, et cetera. We figured something out. And so for that internship, we decided to do the Gender Shades study again, but include two more companies. So we included Clarify, and Amazon. I assumed, given that basically the test answers were available and had been available for over a year, these companies would crush it. So I really was just expecting it to be pretty much a blowout. This is not a particularly challenging data set. Easy grader, nice teacher.

01:17:51

And so I was surprised to see Amazon was where the worst companies were a year prior, even after all of this attention, media, et cetera. So one, we didn’t show anything that was unique to Amazon. We said like their peers, they also have, yep, gender bias. Yep, skin type bias. And yes, that intersectional bias, also there. We showed all of that. It was the same story, more or less, just with a different company, that given their resources and given that they were selling facial recognition to law enforcement, and there had been petitions to them to stop, I would’ve thought there would’ve been a bit more consideration.

01:18:38

So Amazon’s initial approach was actually to attempt to discredit the research. And I remember I was speaking, this is all in the book, we can go way deep into it. So it’s that chapter Poet versus Goliath, so you can get all the nitty-gritty in there. Long story short, they attempted to discredit the research, and that ended up not working. They took the long way around, but eventually also came to, “We will not sell facial recognition to law enforcement until further notice.” These company commitments are, I think, a step in the right direction. But we can’t rely on self-regulation or voluntary commitments for safeguarding the rest of us from AI harms. So I would say that the response was mixed, but I will also say the response from other companies and also other entrepreneurs was this development of an AI auditing ecosystem. So you have many more companies that offer services to, say, if you want to build trustworthy AI or responsible AI or beneficial AI, it keeps changing, but not bad AI. Fill in your not bad version. We can help you test the systems, test your processes, et cetera.

Jon Krohn: 01:20:09

Yeah. So what’s your take on the future? What’s your perspective on what the future of AI will be like? Will algorithms be increasingly ethical? Are we going to figure these issues out? Are people doing the right things out there?

Joy Buolamwini: 01:20:21

I think we’re going to have a diverse future. And so, depending on where in the world you live, there will be different AI regimes and different models and structures for AI governance. We’ve seen a risk-based governance approach emerging in the EU, as we see with the EU AI Act, and I was really gladdened to see that the live use of facial recognition in public spaces was put in the restricted-use category. So, there’s still more to do for sure, and you have places in the US where there’s an exploration of a rights-based approach, as we see with the Blueprint for an AI Bill of Rights. You also have scale-based approaches. So if you have over a million users, et cetera, the requirements for you are not necessarily going to be the same as the startup just trying to make it. So I think what we’re going to have in the future, just like we have different governments around the world, are different structures for AI governance.

Jon Krohn: 01:21:31

Yeah, that’s a good answer. Yeah. I hope that with episodes like this, we get our listeners thinking about the problems that are inherent in most datasets. And because almost all of our algorithms, we want them to be deployed into the real world, they’re going to have a real world impact. And you need to be thinking about how that impact is going to affect different groups, not just… You’ve got to do these main effect analyses. You’ve got to do the intersection analyses. You can’t just be relying on the accuracy stat on the whole dataset if you don’t have a well-balanced dataset.
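To make the point about main effect and intersectional analyses concrete, here is a minimal Python sketch of disaggregated evaluation. It is an illustration under stated assumptions, not the method used in the Gender Shades study: the records, the binary labels, the attribute names, and the helper functions `accuracy_by_group` and `make_records` are all hypothetical and invented for this example.

```python
from collections import defaultdict


def accuracy_by_group(records, keys):
    """Map each combination of the attributes in `keys` to its accuracy."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        group = tuple(r[k] for k in keys)  # () when keys is empty: whole dataset
        total[group] += 1
        correct[group] += int(r["predicted"] == r["actual"])
    return {g: correct[g] / total[g] for g in total}


def make_records(gender, skin, n_correct, n_wrong):
    """Build hypothetical records for one subgroup (toy data only)."""
    wrong_label = "female" if gender == "male" else "male"
    base = {"gender": gender, "skin": skin, "actual": gender}
    return ([dict(base, predicted=gender) for _ in range(n_correct)]
            + [dict(base, predicted=wrong_label) for _ in range(n_wrong)])


# Deliberately unbalanced toy dataset: 100 invented records, not real results.
records = (
    make_records("male", "lighter", 40, 0)
    + make_records("female", "lighter", 27, 3)
    + make_records("male", "darker", 18, 2)
    + make_records("female", "darker", 6, 4)
)

overall = accuracy_by_group(records, [])[()]                      # single headline number
by_gender = accuracy_by_group(records, ["gender"])                # main effect: gender
by_skin = accuracy_by_group(records, ["skin"])                    # main effect: skin type
by_intersection = accuracy_by_group(records, ["gender", "skin"])  # intersectional view

print(f"overall: {overall:.2f}")
for name, table in [("gender", by_gender), ("skin", by_skin),
                    ("gender x skin", by_intersection)]:
    for group, acc in sorted(table.items()):
        print(f"{name} {group}: {acc:.2f}")
```

On this invented data the overall accuracy is 0.91, while the darker-skinned female subgroup sits at 0.60. The headline number hides the gap because that subgroup makes up only 10 of the 100 records.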

Joy Buolamwini: 01:22:15

Right. And there’s also that quest, can you make the well-balanced dataset? And we’ve explored it in various ways. And at the end of the day, whoever is constructing the dataset is bringing their own perspectives, their own biases. And so, I do believe bias mitigation starts with awareness. I don’t think you will eliminate bias because to do that, you would have to eliminate people and you would have to eliminate values. It’s being aware of what those biases are, correcting for the unwanted biases as well. And ultimately focusing on harms, because let’s say you did create more accurate systems that are then used in abusive ways. It’s not just a question about how accurate are the tools I’m creating, but how are these tools being used in the first place?

Jon Krohn: 01:23:08

Yeah. So thank you for answering all of my questions, Joy. I really appreciate it. It’s been such an amazing episode already, but we also have some audience questions for you. So as I alluded to at the beginning of the episode, I mentioned on social media that you would be an upcoming guest on the program, and so we had Florian Feltes point out that you’re a St. Gallen Symposium alumna, but we had some questions as well. So Christina Stathopoulos, who was a guest herself actually on this show in episode number 603, and she became a friend of mine. We recorded in person, so it’s one of those nice things where you get to really meet someone. And so, yeah, Christina’s a huge fan. She’s been following your work for years and she’s beyond jealous that I get to chat with you. So she says, I already know this is going to be my favorite episode of Super Data Science ever.

Joy Buolamwini: 01:24:05

No pressure.

Jon Krohn: 01:24:07

The question for you was, I think we might’ve addressed this to a large extent in the episode, but you might have some tips by me giving you this question again, I suspect you’ll have some great guidance for listeners that hasn’t already come up. So her question is, how do we improve fairness in algorithms and AI? What are your tips and tricks for a more inclusive future with technology?

Joy Buolamwini: 01:24:30

This is a great question, and it comes down to being aware of how we’re defining what fairness means, for example as statistical parity. If we’re looking from a lens of equity, giving everybody the exact same thing might not necessarily address historical or cultural realities. I remember when I was working on my PhD dissertation, this notion of, what is fairness and who gets to define it, came up quite a bit. And what might be fair in one context or in one country might change in another. And so I think it’s really important to articulate the assumptions behind any processes or approaches to fairness.
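For listeners who haven’t met the term, statistical parity (also called demographic parity) is one common formal definition of fairness: each group should receive the favorable outcome at the same rate. A minimal Python sketch follows, assuming binary decisions where 1 means a favorable outcome. The group names, the decisions, and the function names are hypothetical, and this is only one of several competing definitions, which is exactly why the assumptions behind it need spelling out.

```python
def selection_rate(decisions):
    """Fraction of favorable (1) decisions in a list of 0/1 outcomes."""
    if not decisions:
        raise ValueError("need at least one decision per group")
    return sum(decisions) / len(decisions)


def statistical_parity_difference(decisions_by_group, group_a, group_b):
    """Selection rate of group_a minus selection rate of group_b.

    A value of 0 means both groups receive favorable outcomes at the
    same rate. It says nothing about whether the decisions were correct
    or whether the groups started from a level playing field.
    """
    return (selection_rate(decisions_by_group[group_a])
            - selection_rate(decisions_by_group[group_b]))


# Hypothetical decisions invented for illustration (1 = favorable outcome).
decisions_by_group = {
    "group_a": [1] * 6 + [0] * 4,  # 6 of 10 favorable
    "group_b": [1] * 3 + [0] * 7,  # 3 of 10 favorable
}

spd = statistical_parity_difference(decisions_by_group, "group_a", "group_b")
print(f"statistical parity difference: {spd:.2f}")
```

A nonzero difference flags unequal rates, but as the discussion here suggests, a difference of zero would not by itself show that the system is equitable in context.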

01:25:23

So are we assuming, right, it’s the same kind of people with the same sorts of opportunities and everything has been a level playing field? Or are we in a different kind of context? So being aware about the assumptions of fairness and what’s animating those and whether or not those assumptions hold in the real world, because oftentimes you can do the toy problems which are helpful for intellectually exploring an idea, but sometimes that can go to such a clinical place, it doesn’t actually make sense in the context of people’s lived lives. And so I think when it comes to fairness, it’s really important that you talk to the people who might be impacted by the AI systems or ML systems you’re creating to have a better sense.

01:26:17

I think another way of coming at this, a lot of my work is around algorithmic auditing, but also thinking about a full life cycle of an AI system from design, development, deployment, oversight, and a place that is oftentimes not even considered in this conversation around fairness, redress. So what’s the fair way to respond to an algorithmic harm? Because maybe you didn’t quite get fairness right in the design, development, deployment of the system. And so I think that’s also, there have been notions of things like algorithmic reparations. I’m not sure exactly what that would look like, but in that conversation of reparations and repair, it is saying we have to address larger systemic issues that go beyond what’s happening in a particular snapshot.

01:27:13

Part of what we’re doing with the Algorithmic Justice League, with the X-Coded Experience Platform is collecting stories and experiences from people. So there is an evidentiary record. So where laws do exist or where litigators are hungry for the class action lawsuits, there are pathways for redress. And I seldom hear the conversation of redress included in conversations about fairness in AI, but I think it’s a crucial piece.

Jon Krohn: 01:27:45

Yeah. In my years hosting this show, we’ve had a number of episodes on ethics and responsible AI, and this is my first time hearing it as well. So yeah, that’s a great tidbit there on redress. And yeah, I think a really great point there as well was just your point about talking to the people that will be affected by these algorithms, really understanding what they’re like and considering the whole life cycle of the algorithm development. Our second question here comes from Kerry Benjamin. So Kerry’s a data science associate and asks, after all these years with all the challenges that you’ve come across, what are the things that have kept you going?

Joy Buolamwini: 01:28:26

The youth. I’m also youthful myself, right? I remember getting a video from a seven-year-old at the time named Aurora. Her mom reached out to the Algorithmic Justice League and she shared that for a school project, they had to pick a historic figure. I’m like, “Look, I’m in my 30s. I don’t view myself as historic, but okay, I’ll keep reading.” And so for the class, they created a living wax museum. So she got her red blazer, she got her white glasses, and her mom sent the video. So she goes, “I’m Dr. Joy Buolamwini and I have a bunch of degrees,” which just sent my parents laughing. And then she had watched Coded Bias a number of times, she knew all of these. She had done her research. I mean I think maybe Super Data Science is the closest to matching the level of research she had done on me and the Algorithmic Justice League. And also just working with organizations like Encode Justice, led by high schoolers, and the energy, the eagerness, the readiness to build a better society for themselves and for us, that always renews my energy when I’m feeling a little bit depleted.

01:29:57

Not so long ago, I had the opportunity to go to Venice for the DVF Awards, Diane von Fürstenberg, the fashionista, the inventor of the wrap dress. And in her 70s, she’s using her time and resources to uplift women leaders in a very powerful way. And so to be honored alongside the Deputy Secretary-General of the United Nations, Amina J. Mohammed; Amal Clooney, and all of the powerful work she’s done as a human rights activist; storyteller-comedian Lilly Singh; and Helena, who’s a youth activist, where they’ve been working in Ecuador to actually have the government stop the deforestation of the Amazon, just leaves me incredibly inspired to know that there are fellow sister warriors out there, sibling warriors, any gender, all genders welcome, really pushing to make change in many different areas. And so being in community, taking the time to celebrate the accomplishments, even though there’s so much more to be done, it was a good reminder, the DVF Awards.

01:31:15

And at AJL, we have something called the Gender Shades Justice Award, which we give to an X-coded person because oftentimes researchers like myself are celebrated, or you’ll have filmmakers like Shalini, who was the director for Coded Bias, an Emmy-nominated film, but we wanted to celebrate the people who are being harmed by AI and are nonetheless using their voices, using their platform to speak out. And so the first Gender Shades Justice Award went to Robert Williams, who was falsely arrested in front of his wife and two young daughters, held for 30 hours, and he has been speaking up about it. And so, just like the DVF Awards, it came with an award and some money as well that can be used as they see fit. But those types of experiences and the people on the front lines, like Robert Williams, like Porcha Woodruff, the students who are inspired like Aurora, they definitely keep me motivated.

Jon Krohn: 01:32:23

Nice. Those were all great anecdotes and for people watching the YouTube version, they can see the DVF Award right over Joy’s right shoulder on the shelf behind her. And so last question here from Dr. John “Boz” Handy Bosma, who is at the IBM Academy of Technology Leadership. He’s a senior IT architect there. He says, it’s a bit of a long question here, but it’s around the idea of what are your thoughts, Joy, on turning nascent movements in AI toward the goal of ending systemic racism with specific reference to whether or how AI might be turned to the goal of closing racial, ethnic, and gender wealth gaps globally? So yeah, we got a taste of it there with your last response. Some awards, individual awards, but yeah. Yeah, this is obviously a broader question.

Joy Buolamwini: 01:33:27