How do Neural Networks Work?


Having already looked at the neuron and the activation function, in this tutorial we will dig into how Neural Networks actually work.

If you have forgotten the structural elements or functionality of Neural Networks, you can always scroll back through the previous articles.

Off the Page and Into Practice

This will be a step-by-step examination of how Neural Networks can be applied to a real-world example: property valuation.

The pinnacle of your adult life. The moment you realize that your days of falling asleep on the couch watching South Park reruns are finally coming to an end.

You are getting on the property ladder.

Choices, Choices

Almost everybody wants to own a house, but I can’t think of anybody who enjoys buying one. Why is that?

Well, it’s time-consuming and boring, and every ill-considered choice can take you a step closer to long-term financial bondage. It’s so stressful. Every option becomes an imperative you must consider painstakingly, and objectively at that. It’s almost a puzzle to solve.

Luckily for us, we have Neural Networks. They can find answers faster than we can, and without the hair-tugging vacillation we would likely go through along the way.

Remember

One thing to remember before we get into this example: in this section, we will not be training the network. Training is a very important part of working with Neural Networks, but don’t stress; we will look at it later on, once we better understand how Neural Networks learn. This part is all about application, so we will imagine our Neural Network is already trained, primed and ready to go.

Back to the task at hand

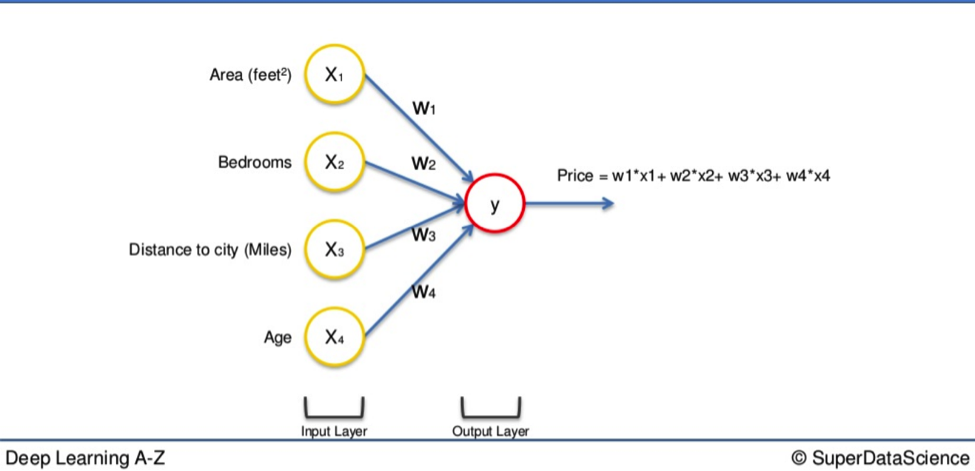

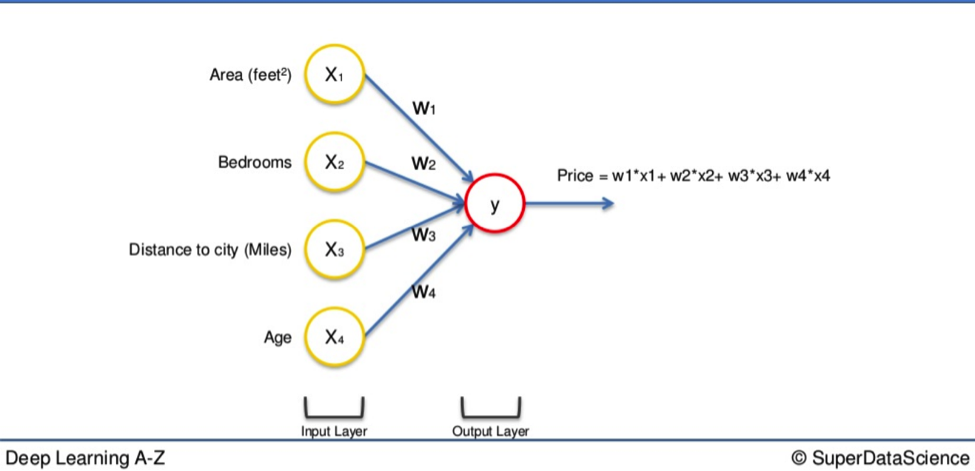

As you will remember, we always begin with a layer of input variables. These are different factors assembled in a single row of data, shown on the left-hand side of the diagram.

In this simple example we have four variables:

- Area (sq ft)

- Number of bedrooms

- Distance to city (miles)

- Age of property

Their values travel through the weighted synapses straight over to the output layer. All four are combined, an activation function is applied, and the result (the estimated price) is produced.
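As a rough illustration of this input-to-output setup, here is a minimal Python sketch of a network with no hidden layer: one weighted sum over the four inputs plus a bias, passed through an activation. The weights, the bias and the function name `predict_price` are hypothetical stand-ins for what a trained network would have learned, and ReLU is assumed as the activation only because it keeps the predicted price from going negative; the text does not specify which activation is used.

```python
from typing import Sequence


def relu(z: float) -> float:
    """Rectifier activation: keeps positive values, clips negatives to 0."""
    return max(0.0, z)


def predict_price(inputs: Sequence[float], weights: Sequence[float],
                  bias: float) -> float:
    """No hidden layer: one weighted sum of all inputs, then an activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(weighted_sum)


# Input order: area (sq ft), bedrooms, distance to city (miles), age (years).
house = [2000.0, 3.0, 10.0, 20.0]

# Hypothetical weights and bias standing in for an already-trained network.
weights = [150.0, 10_000.0, -2_000.0, -1_000.0]
bias = 50_000.0

print(predict_price(house, weights, bias))  # 340000.0
```

Every input feeds the same single calculation here, so the network can only learn one fixed trade-off between the four factors.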

This is comprehensive enough on a basic level. But there is a way to amplify the power of the Neural Network and increase its accuracy by a very simple addition to the system.

Power Up

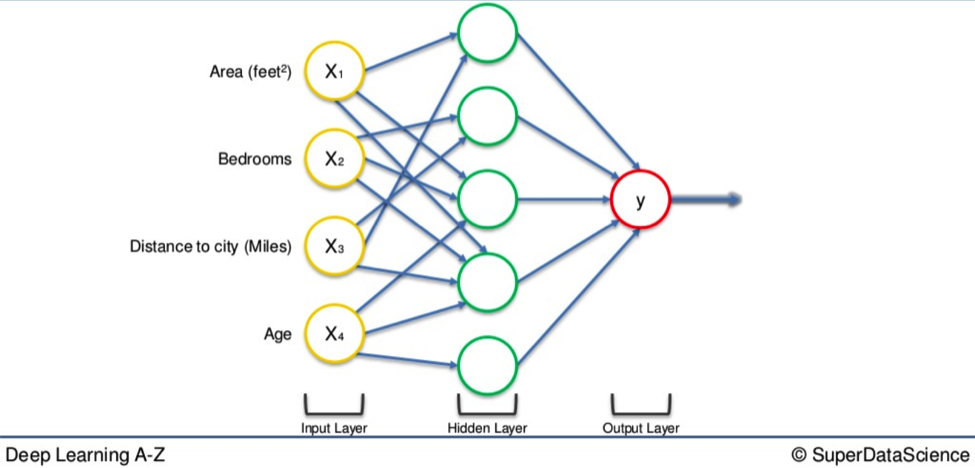

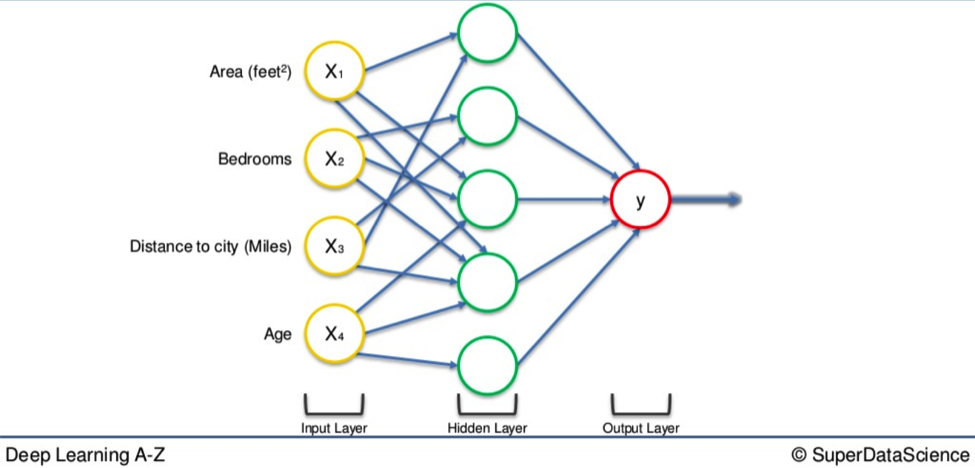

You can implement a hidden layer that sits between the input and output layers.

From this new cobweb of arrows, representing the synapses, you begin to understand how these factors, in differing combinations, cast a wider net of possibilities. The result is a far more detailed composite picture of what you are looking for.
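To make the extra layer concrete, the sketch below runs one forward pass through a hidden layer in plain Python. It is a minimal illustration under stated assumptions: two hidden neurons with ReLU activations, a linear output neuron (a common choice when predicting a number such as a price), and made-up weights. The names `dense`, `forward`, `HIDDEN_WEIGHTS` and friends are hypothetical; each row of `HIDDEN_WEIGHTS` holds one hidden neuron's synapse weights.

```python
from typing import List, Sequence, Tuple


def relu(z: float) -> float:
    """Rectifier activation: keeps positive values, clips negatives to 0."""
    return max(0.0, z)


def dense(inputs: Sequence[float],
          weights: Sequence[Sequence[float]],
          biases: Sequence[float]) -> List[float]:
    """Fully connected layer: one weighted sum plus bias per neuron, then ReLU."""
    return [relu(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]


def forward(inputs: Sequence[float],
            hidden_weights: Sequence[Sequence[float]],
            hidden_biases: Sequence[float],
            output_weights: Sequence[float],
            output_bias: float) -> Tuple[List[float], float]:
    """Input layer -> hidden layer -> one linear output neuron (the price)."""
    hidden = dense(inputs, hidden_weights, hidden_biases)
    price = sum(h * w for h, w in zip(hidden, output_weights)) + output_bias
    return hidden, price


# Input order: area (sq ft), bedrooms, distance (miles), age (years).
house = [2000.0, 3.0, 10.0, 20.0]

# Hypothetical trained parameters: one row of synapse weights per hidden neuron.
HIDDEN_WEIGHTS = [
    [0.125, 0.0, -5.0, 0.0],    # neuron 1 looks at Area and Distance only
    [0.0625, 20.0, 0.0, -2.0],  # neuron 2 looks at Area, Bedrooms and Age
]
HIDDEN_BIASES = [0.0, 0.0]
OUTPUT_WEIGHTS = [1000.0, 1000.0]
OUTPUT_BIAS = 50000.0

hidden, price = forward(house, HIDDEN_WEIGHTS, HIDDEN_BIASES,
                        OUTPUT_WEIGHTS, OUTPUT_BIAS)
print(hidden)  # [200.0, 145.0]
print(price)   # 395000.0
```

The output neuron no longer sees the raw inputs; it sees what each hidden neuron detected, which is where the "different combinations" of factors come from.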

Let’s go step-by-step

We begin with the four variables on the left and the top neuron of the hidden layer in the middle.

All four variables will be connected to the neuron by synapses.

However, not all of the synapses count in the calculation. Each one will have either a zero or a non-zero weight.

A non-zero weight indicates that the variable matters to this neuron, while a zero weight means it is effectively discarded.

For instance, the Area and Distance variables may have non-zero weights, which means they matter. The other two variables, Bedrooms and Age, have zero weights and so are not considered by that first neuron. Got it? Good.
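A quick way to see why a zero weight means "not considered" is to feed one neuron two houses that differ only in Bedrooms and Age. In this minimal sketch the weights in the hypothetical `AREA_DISTANCE_WEIGHTS` list are invented; the only point is that Bedrooms and Age are multiplied by zero, so the neuron's output cannot change when they do.

```python
from typing import Sequence


def relu(z: float) -> float:
    """Rectifier activation: keeps positive values, clips negatives to 0."""
    return max(0.0, z)


def neuron(inputs: Sequence[float], weights: Sequence[float],
           bias: float = 0.0) -> float:
    """One neuron: weighted sum of its inputs plus a bias, passed through ReLU."""
    return relu(sum(x * w for x, w in zip(inputs, weights)) + bias)


# Input order: area (sq ft), bedrooms, distance (miles), age (years).
# Hypothetical weights: only Area and Distance are non-zero.
AREA_DISTANCE_WEIGHTS = [0.125, 0.0, -5.0, 0.0]

one_bed_old = [2000.0, 1.0, 10.0, 80.0]
five_bed_new = [2000.0, 5.0, 10.0, 1.0]

# Bedrooms and Age differ, but their zero weights cancel them out.
print(neuron(one_bed_old, AREA_DISTANCE_WEIGHTS))   # 200.0
print(neuron(five_bed_new, AREA_DISTANCE_WEIGHTS))  # 200.0
```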

You may wonder why that first neuron is only considering two of the four variables.

In this case, it is common in the property market for larger homes to become cheaper the further they are from the city.

That’s a well-known pattern. So what this neuron may be doing is looking specifically for properties that are large but not far from the city.

These are properties with unusually large square footage for their proximity to a metropolis. The benefits of this are clear. A person with a large family may work in the city, and their children may go to school there. It would be beneficial for everybody to have their own space at home and also not to have to wake up when the cock crows every morning.

This is Speculation

We have not yet covered how Neural Networks are trained. Based on the variables at hand, this is an educated guess as to how the neuron is processing these variables.

Once the Distance and Area criteria have been met, the neuron’s weighted sum is large enough for its activation function to fire. Through this neuron, those two variables then contribute to the price in the final output layer.

This is where the power of the Neural Network comes from. There are many of these neurons, each making similar calculations with different combinations of these variables.
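To show what "the criteria have been met" could mean numerically, here is a sketch of the Area and Distance neuron with a ReLU activation. The weights, the negative bias (which acts as a threshold) and the name `area_distance_neuron` are all assumptions made for illustration, not values from a trained network; they were chosen so that only a large house close to the city produces a non-zero output.

```python
def relu(z: float) -> float:
    """Rectifier activation: keeps positive values, clips negatives to 0."""
    return max(0.0, z)


def area_distance_neuron(area_sqft: float, distance_miles: float) -> float:
    """Hypothetical hidden neuron that responds to big homes close to the city.

    Bedrooms and Age have zero weights for this neuron, so they are left out.
    Area pushes the sum up, Distance pulls it down, and the bias of -150
    acts as a threshold the combination has to clear before the neuron fires.
    """
    weighted_sum = 0.125 * area_sqft - 20.0 * distance_miles - 150.0
    return relu(weighted_sum)


print(area_distance_neuron(2400, 5))   # large, close: 300 - 100 - 150 = 50.0
print(area_distance_neuron(2400, 30))  # large, far: -450 clipped to 0.0
print(area_distance_neuron(800, 5))    # small, close: -150 clipped to 0.0
```

When the neuron outputs 0.0 it contributes nothing to the price; only when it fires does its value get passed on, scaled by its own weight, to the output layer.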

The next neuron down may have weighted synapses for Area, Bedrooms and Age. It may have been trained on data from a specific area or city where there is a large number of families but where many of the properties are new. New properties are often more expensive than old ones.

If you have a new property with three or four bedrooms and large square footage, you can see how the neuron has identified the value of such a place, regardless of its distance to the city.
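The second neuron can be sketched the same way. In this hypothetical `family_home_neuron`, Area and Bedrooms have positive weights, Age has a negative weight (so newer homes score higher), and Distance has a zero weight, which is what "regardless of its distance to the city" amounts to. All numbers are invented for illustration.

```python
def relu(z: float) -> float:
    """Rectifier activation: keeps positive values, clips negatives to 0."""
    return max(0.0, z)


def family_home_neuron(area_sqft: float, bedrooms: float,
                       distance_miles: float, age_years: float) -> float:
    """Hypothetical hidden neuron for large, new family homes.

    Distance is multiplied by a zero weight, so it never affects the output.
    Age has a negative weight, so older properties push the sum down.
    """
    weighted_sum = (0.0625 * area_sqft + 40.0 * bedrooms
                    + 0.0 * distance_miles - 4.0 * age_years - 200.0)
    return relu(weighted_sum)


print(family_home_neuron(2400, 4, 5, 2))   # new 4-bed, close: 102.0
print(family_home_neuron(2400, 4, 40, 2))  # same house, far away: 102.0
print(family_home_neuron(2400, 4, 5, 40))  # same house, 40 years old: 0.0
```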

The way these neurons work and interact makes the network itself extremely flexible, allowing it to look for specific things and therefore to search comprehensively for whatever it has been trained to identify.

That was a simple example of a Neural Network in action. In the next tutorial, we will look at how Neural Networks learn.