Backpropagation

Unfortunately, friends, this is our final deep learning tutorial.

But fear not: before the tears begin to flow too freely, you can stem the tide with some lovely, soft Backpropagation.

Adjusting

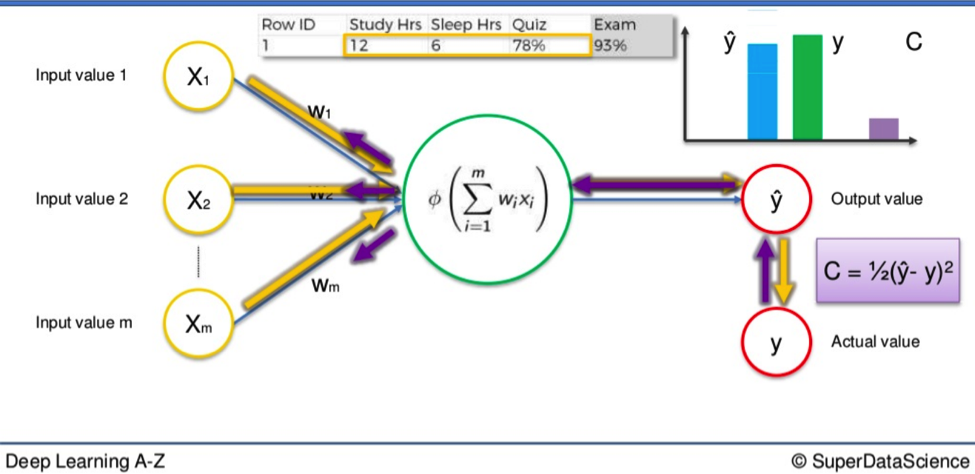

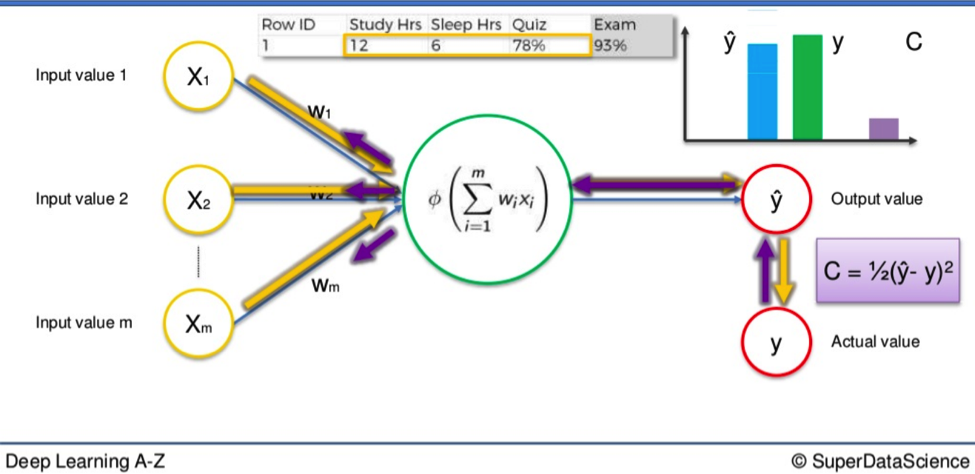

Backpropagation is an algorithm, grounded in calculus (in particular the chain rule), that works out how much each weight in our Neural Network contributed to the error. This is what allows us to adjust all the weights in the network.

This is important because it is through the manipulation of weights that we bring the output value (the value produced by our Neural Network) and the actual value closer together.

These two values need to be as close as possible.

If they are far apart, we use Backpropagation to send the error backwards through the Neural Network, from the output towards the inputs. The weights are adjusted accordingly, which brings the output value closer to the actual value.
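To make this concrete, here is a minimal sketch of a single adjustment for one neuron with two inputs. It assumes a linear neuron with no bias or activation function and a squared-error cost, C = ½(ŷ − y)²; the function names are hypothetical and not taken from any library. The error is passed backwards through the chain rule to get a gradient for each weight, and each weight is nudged against its gradient.

```python
# Hypothetical single linear neuron: y_hat = w1*x1 + w2*x2 (no bias, no activation).
def forward(weights, inputs):
    return sum(w * x for w, x in zip(weights, inputs))

def cost(y_hat, y):
    # Assumed squared-error cost: C = 1/2 * (y_hat - y)^2
    return 0.5 * (y_hat - y) ** 2

def backprop_step(weights, inputs, y, learning_rate=0.1):
    y_hat = forward(weights, inputs)
    error = y_hat - y  # dC/dy_hat
    # Chain rule: dC/dw_i = dC/dy_hat * dy_hat/dw_i = error * x_i
    grads = [error * x for x in inputs]
    # Move each weight a small step against its gradient
    return [w - learning_rate * g for w, g in zip(weights, grads)]

weights = [0.1, -0.2]
inputs = [1.0, 2.0]
y = 1.0  # the actual value

before = cost(forward(weights, inputs), y)
weights = backprop_step(weights, inputs, y)
after = cost(forward(weights, inputs), y)
print(f"cost before: {before:.4f}, after: {after:.4f}")
```

With these numbers, the cost drops from roughly 0.85 to roughly 0.21 after one step: the output value has moved closer to the actual value.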

In a Nutshell

The key underlying principle of Backpropagation is that, because of the way the algorithm is structured, a single backward pass works out the adjustment for every weight, so large numbers of weights can be adjusted simultaneously.

This drastically speeds up training and is a key reason why Neural Networks are able to work as well as they do.
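The sketch below illustrates the "all at once" idea on a tiny, hypothetical network: two inputs, two sigmoid hidden neurons, one linear output, no biases, and a squared-error cost (all assumptions chosen for brevity). One backward pass reuses the output error to produce gradients for all six weights. A finite-difference check on one weight is included as a sanity test; every name here is illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(W1, w2, x):
    # Hidden layer: h_j = sigmoid(sum_i W1[j][i] * x[i])
    h = [sigmoid(sum(wji * xi for wji, xi in zip(row, x))) for row in W1]
    # Output layer: linear combination of the hidden activations
    y_hat = sum(wk * hk for wk, hk in zip(w2, h))
    return h, y_hat

def cost(W1, w2, x, y):
    _, y_hat = forward(W1, w2, x)
    return 0.5 * (y_hat - y) ** 2

def backward(W1, w2, x, y):
    """One backward pass: gradients of C = 1/2 (y_hat - y)^2 for every weight."""
    h, y_hat = forward(W1, w2, x)
    delta_out = y_hat - y                          # dC/dy_hat
    grad_w2 = [delta_out * hk for hk in h]         # output-layer weights
    # Error sent back to each hidden neuron, times sigmoid'(z_j) = h_j * (1 - h_j)
    delta_hidden = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    grad_W1 = [[delta_hidden[j] * xi for xi in x] for j in range(len(h))]
    return grad_W1, grad_w2

W1 = [[0.1, -0.3], [0.2, 0.4]]
w2 = [0.5, -0.1]
x, y = [1.0, 0.5], 1.0
grad_W1, grad_w2 = backward(W1, w2, x, y)

# Sanity check one weight with a central finite difference
eps = 1e-5
W1_plus = [row[:] for row in W1]
W1_minus = [row[:] for row in W1]
W1_plus[0][1] += eps
W1_minus[0][1] -= eps
numeric = (cost(W1_plus, w2, x, y) - cost(W1_minus, w2, x, y)) / (2 * eps)
print("backprop:", grad_W1[0][1], "finite difference:", numeric)
```

Checking gradients numerically like this needs extra forward passes for every single weight, which is exactly the cost that backpropagation avoids.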

Additional Reading

I mentioned this in the last post, but here is some additional reading material in case you want to get into some extra deep learning on this process. Neural Networks and Deep Learning by Michael Nielsen (2015) is all you will need to go full Einstein on this subject. Give it a look.

Several times throughout this course I have mentioned the importance of training your Neural Network. Because I wanted you to see the Networks in action before examining how they are trained, I have left this until last.

A step-by-step training guide for your Neural Network

- Randomly initialise the weights to small numbers close to 0 (but not 0).

- Input the first observation. One feature per input node.

- Forward-Propagation. From left to right, the neurons are activated and the output value is produced.

- Compare the output value to the actual value and measure the difference between the two: the generated error.

- From right to left, the generated error is back-propagated and the weights are adjusted accordingly. The learning rate of the Network determines how much the weights are adjusted at each step.

- Repeat the five steps above, adjusting the weights either after each observation (online learning, also called stochastic gradient descent) or after a batch of observations (batch learning).

- When the whole training set has passed through the Neural Network, that makes an epoch. Repeat for more epochs.
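Putting the steps together, here is a minimal training-loop sketch. To stay short, it trains a single sigmoid neuron (with a bias) on a made-up OR dataset using a squared-error cost, rather than a multi-layer network; `train`, `predict` and the `batch_size` parameter are hypothetical names. Setting `batch_size=1` adjusts the weights after every observation, while `batch_size=len(data)` adjusts them once per full batch. Each pass of the outer loop is one epoch.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=5000, learning_rate=0.5, batch_size=1, seed=0):
    rng = random.Random(seed)
    n_inputs = len(data[0][0])
    # Step 1: small random weights close to (but not exactly) 0; last entry is the bias
    weights = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs + 1)]
    for _ in range(epochs):  # one full pass over the data = one epoch
        rng.shuffle(data)    # assumption: shuffle the observations each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grads = [0.0] * len(weights)
            for x, y in batch:
                xb = list(x) + [1.0]  # Step 2: one feature per input, plus a bias input
                y_hat = sigmoid(sum(w * xi for w, xi in zip(weights, xb)))  # Step 3
                error = y_hat - y     # Step 4: compare with the actual value
                # Step 5: back-propagate dC/dz for C = 1/2 (y_hat - y)^2
                delta = error * y_hat * (1.0 - y_hat)
                for i, xi in enumerate(xb):
                    grads[i] += delta * xi / len(batch)
            # Step 6: adjust after each observation (batch_size=1) or after each batch
            weights = [w - learning_rate * g for w, g in zip(weights, grads)]
    return weights

def predict(weights, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, list(x) + [1.0])))

or_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w_online = train(list(or_data), batch_size=1)
w_batch = train(list(or_data), batch_size=len(or_data))
for x, y in or_data:
    print(x, "actual:", y, "online:", round(predict(w_online, x), 2),
          "batch:", round(predict(w_batch, x), 2))
```

Shuffling the observations at the start of each epoch is a common addition that the steps above do not mention; it is included here as an assumption and can be removed without changing the rest of the loop.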

And that is that. I hope this deep learning experience has been somewhat useful to you. I hope you come back soon.