The Neuron


In this deep learning tutorial we are going to examine the Neuron in Neural Networking. Briefly, we will cover:

- What it is

- What it does

- Where it fits in the Neural Network

- Why it is important

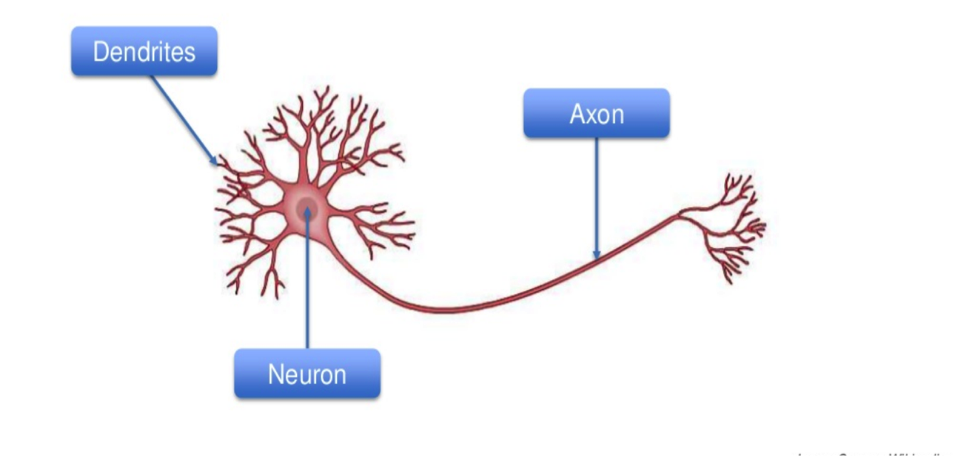

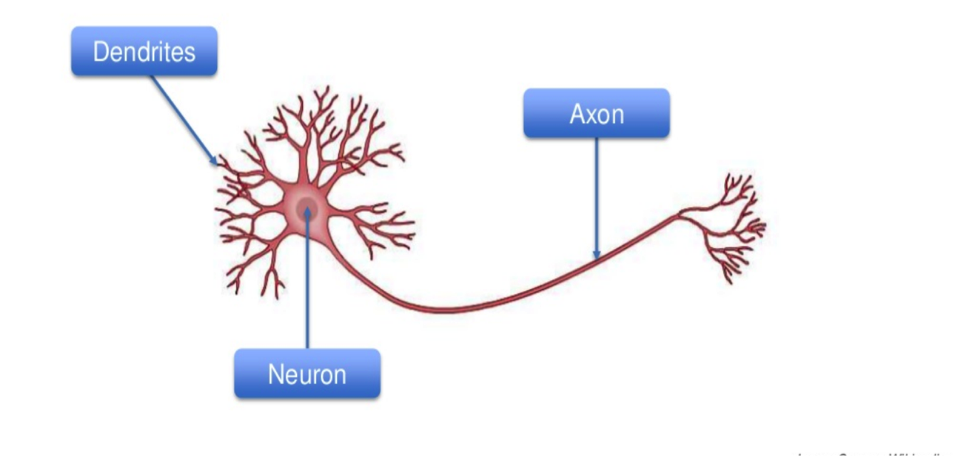

The neuron that forms the basis of all Neural Networks is an imitation of what has been observed in the human brain.

This odd pink critter is just one of the billions swimming around inside our brains.

Its eyeless head is the body of the neuron. It is connected to other neurons by the tentacles around it, called dendrites, and by its tail, called the axon. Through these flow the electrical signals that form our perception of the world around us.

Strangely enough, at the moment a signal is passed between an axon and a dendrite, the two don’t actually touch. A gap exists between them. To continue its journey, the signal must act like a stuntman jumping across a deep canyon on a dirtbike. The junction where the signal makes this jump is called the synapse.

For simplicity’s sake, this is the term I will also use when referring to the passing of signals in our Neural Networks.

How has the biological neuron been reimagined?

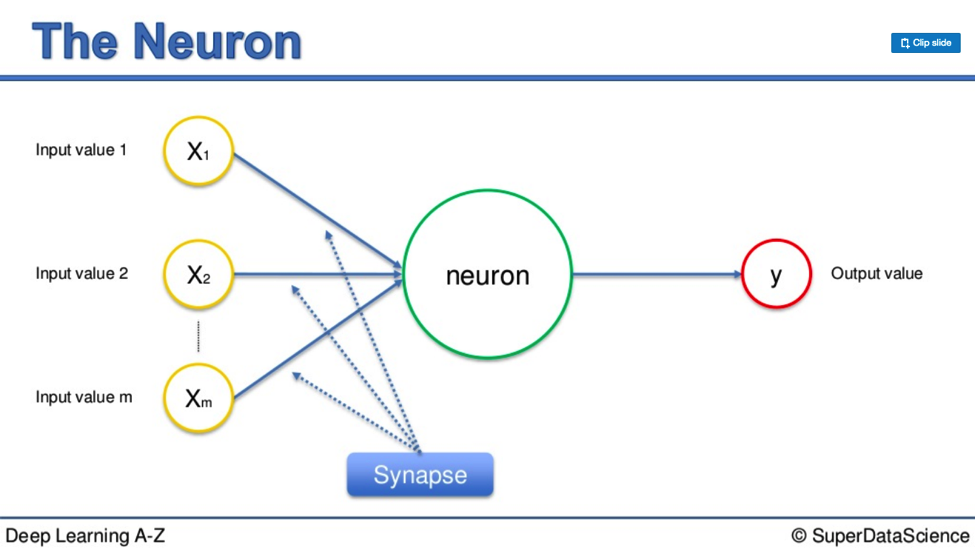

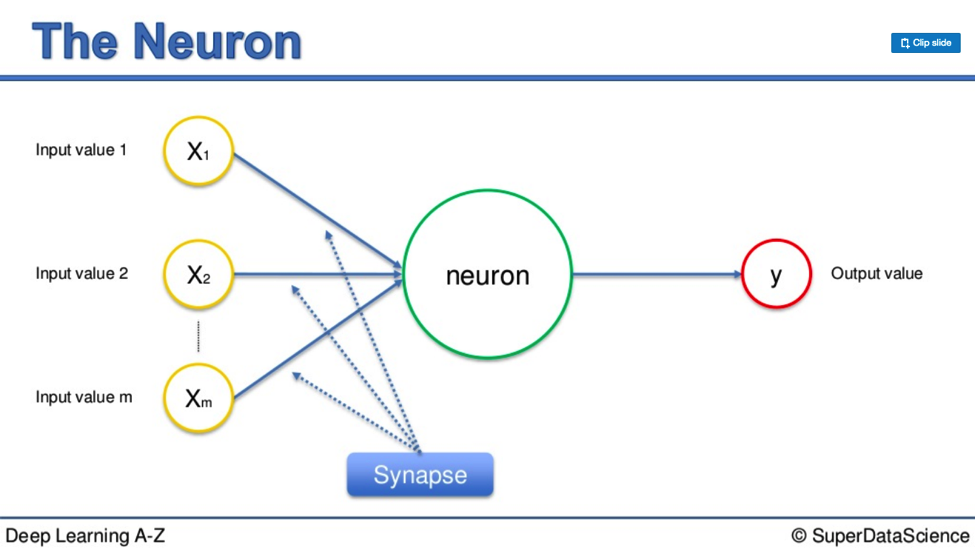

Here is a diagram expressing the form a neuron takes in a Neural Network.

The inputs on the left side represent the incoming signals to the main neuron in the middle. In a human neuron, these would include signals such as smell or touch.

In your Neural Network these inputs are independent variables. They travel down the synapses, go through the big grey circle, then emerge the other side as output values. It is a like-for-like process, for the most part.

The main difference between the biological process and its artificial counterpart is the level of control you exert over the input values, that is, the independent variables on the left-hand side.

You cannot decide how badly something stinks, whether a screeching sound pierces your ears, or how slippery your controller gets after losing, yet again, on FIFA 18.

You can, however, determine what variables will enter your Neural Network.

It is important to remember that you must either standardize the values of your independent variables or normalize them. These processes keep your variables within a similar range, so it is easier for your Neural Network to process them. Skipping this step tends to make training slower and less reliable.
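To make the two options concrete, here is a minimal sketch in plain Python. It assumes the common definitions: standardizing means subtracting the mean and dividing by the standard deviation, and normalizing means min-max scaling into the range 0 to 1. The function names and the `ages` list are made up for illustration.

```python
from statistics import mean, pstdev

def standardize(values):
    """Rescale values to mean 0 and standard deviation 1 (z-scores)."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation; must not be 0
    return [(v - mu) / sigma for v in values]

def normalize(values):
    """Rescale values into the range [0, 1] (min-max scaling)."""
    lo, hi = min(values), max(values)  # hi must differ from lo
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical ages for five people, invented for illustration.
ages = [20, 30, 40, 50, 60]

standardized_ages = standardize(ages)
normalized_ages = normalize(ages)
print(normalized_ages)  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

Either way, a variable measured in years and another measured in kilograms end up on a comparable scale before they reach the neuron.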

Observations

It is equally important to note that each variable does not stand alone. Together, the variables form a single observation.

For example, you may list a person’s height, age, and weight. These are three different descriptors, but they pertain to one individual person.

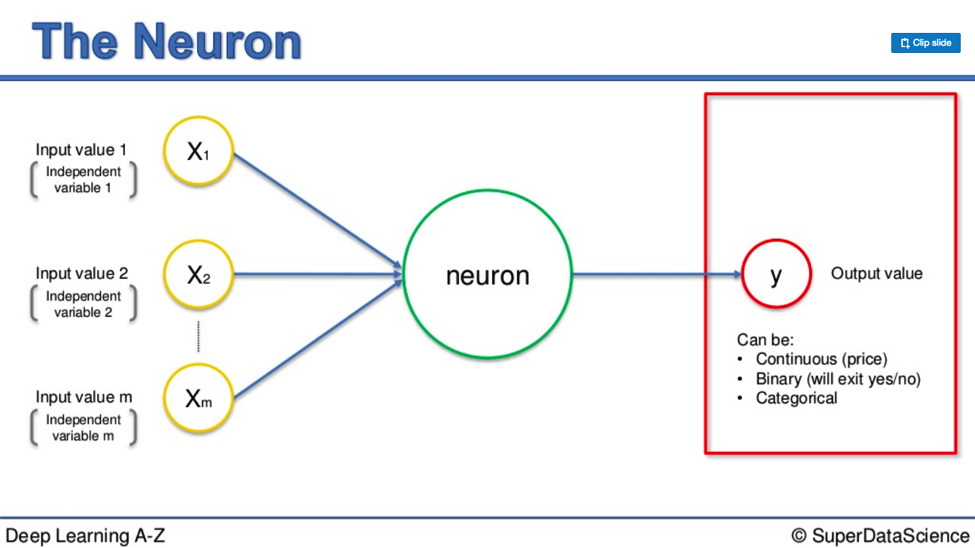

Now, once these values pass through the main neuron and break on through to the other side, they become output values.

Output values

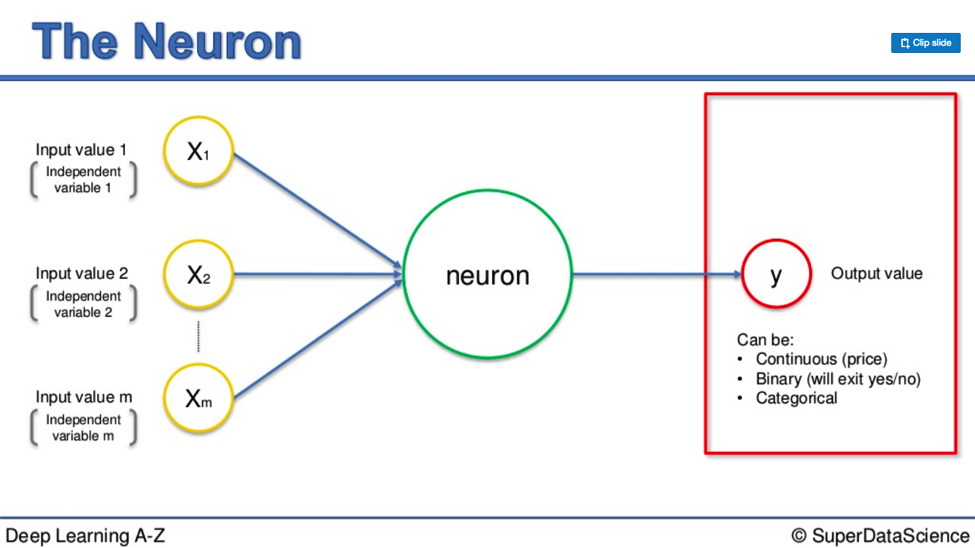

Output values can take different forms. Take a look at this diagram:

They can be:

- continuous (price)

- binary (yes or no)

- or categorical.

A categorical output will fan out into multiple variables. However, just as the input variables are different parts of a whole, the same goes for a categorical output. Picture it like a bobsled team: multiple entities packed inside one vehicle.
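One common way to picture a categorical output fanning out is one-hot encoding: one 0/1 variable per category, with exactly one of them switched on. This is a sketch under that assumption. The `one_hot` helper and the category names are hypothetical.

```python
def one_hot(label, categories):
    """Return one 0/1 value per category, with a 1 marking the given label."""
    return [1 if label == category else 0 for category in categories]

# Hypothetical categories, invented for illustration.
categories = ["cat", "dog", "bird"]

encoded = one_hot("dog", categories)
print(encoded)  # [0, 1, 0]
```

The three values are the bobsled team: separate entries, but together they describe one answer.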

Singular Observations

To keep things clear in your mind when working through this, remember that both the inputs on the left and the outputs on the right belong to a single observation.

The neuron is sandwiched between two single rows of data. There may be three input variables and one output. It doesn’t matter. They are two single corresponding rows of data. One for one.

Back to the Stuntman

If he’s marauding over the soft pink terrain of the human brain he will eventually reach the canyon we mentioned before. He needs to jump it.

Perhaps there is a crowd of beautiful women and a stockpile of booze on the other side. He can hear ZZ Top blasting from unseen speakers.

He needs to get over there. In the brain, he has to take the leap. He would much rather face this dilemma in a Neural Network, where he doesn’t need the bike to reach his nirvana. Here, he has a tightrope linking him to the promised land.

This is the synapse.

Weights

Each synapse is assigned a weight. Just as the tautness of the tightrope is integral to the stuntman’s survival, so is the weight assigned to each synapse for the signal that passes along it. Weights are a pivotal factor in a Neural Network’s functioning.

Weights are how Neural Networks learn

Based on each weight, the Neural Network decides what information is important, and what isn’t.

The weight determines which signals get passed along or not, or to what extent a signal gets passed along. The weights are what you will adjust through the process of learning. When you are training your Neural Network, not unlike with your body, the work is done with weights. Later on I will cover Gradient Descent and Backpropagation.

These concepts deal with how and why the weights are altered, and with what works best.
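Before we get to how weights are learned, here is a rough illustration of what a weight does to a signal. It assumes the usual convention that a signal is multiplied by the weight of the synapse it travels along. The numbers are invented.

```python
# Hypothetical input signals and synapse weights, invented for illustration.
signals = [2.0, 2.0, 2.0]
weights = [0.0, 0.5, 1.0]

# Each signal is multiplied by the weight of the synapse it travels along.
weighted_signals = [s * w for s, w in zip(signals, weights)]
print(weighted_signals)  # [0.0, 1.0, 2.0]
```

A weight of 0 blocks the signal entirely, 0.5 lets half of it through, and 1 passes it along untouched.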

That’s everything to do with what goes into the neuron, what comes out, and by what means.

What happens inside the neuron?

How are input signals altered in the neuron so they come out the other side as output signals? I’m sad to say it’s slightly less adventurous than a tiny stuntman taking risks on his travels to who-knows-where.

It all comes down to plain old addition.

First, the neuron takes every input value it has received, multiplies each one by the weight of its synapse, and adds the results all up. Simple.

It then applies an activation function that has been assigned either to the neuron itself or to an entire layer of neurons. I will go into deeper learning on the activation function later on. For now, all you need to know is that this function determines whether a signal gets passed on or not.

That signal goes on to the next neuron down the line, then the next, and so on and so forth. That’s it.
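The whole trip through the neuron can be sketched in a few lines of Python. Treat this as a sketch, not the definitive implementation: the sigmoid is just one possible activation function, picked here as an assumption since activation functions are covered later, and the `neuron` function and its numbers are hypothetical.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, activation=sigmoid):
    """Weighted sum of the inputs, followed by an activation function."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return activation(weighted_sum)

# One observation with three already-scaled input values (hypothetical).
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, 0.1]

output = neuron(inputs, weights)
print(output)  # a single value between 0 and 1
```

Here the weighted sum is 0.2 - 0.3 + 0.2 = 0.1, and the sigmoid turns that into roughly 0.525. That single number is what travels on to the next neuron.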

In Conclusion

We have covered:

- Input Values

- Weights

- Synapses

- The Neuron

- The Activation Function

- Output Values

This is the process you will see repeated again and again and again. . . all the way down the line, hundreds or thousands of times depending on the size of your Neural Network and how many neurons are within it.

Additional Reading

For deeper learning on Artificial Neural Networks and the Neuron, you can read a paper titled Efficient BackProp by Yann LeCun et al. (1998). The link is here.

Join me next time as I cover the activation function and try to invent another imaginary thrill-seeker to illustrate the processes there.

Happy learning.