Jon Krohn: 00:00:00

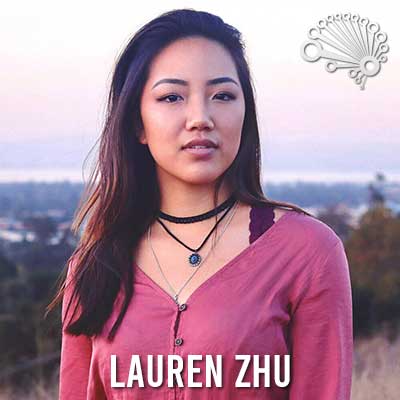

This is episode number 549 with Lauren Zhu, software engineer at Glean.

Jon Krohn: 00:00:12

Welcome to the SuperDataScience Podcast, the most listened to podcast in the data science industry. Each week, we bring you inspiring people and ideas to help you build a successful career in data science. I am your host, Jon Krohn. Thanks for joining me today, and now let’s make the complex simple.

Jon Krohn: 00:00:43

Welcome back to the SuperDataScience Podcast. We are fortunate today to be joined by the cool, fun, and brilliant Lauren Zhu. Lauren is a machine learning engineer at Glean, a Silicon Valley-based natural language understanding and search company that has raised $55 million in capital from big-name venture capital firms like Kleiner Perkins and Lightspeed. Prior to Glean, she worked as a machine learning intern at both Apple and the autonomous vehicle subsidiary of Ford Motor Company, as a software engineering intern at Qualcomm, and as an AI researcher at the prestigious University of Edinburgh. She holds both bachelor’s and master’s degrees in computer science from Stanford. While at Stanford, she served as a teaching assistant a remarkable five times for some of Stanford’s most renowned machine learning courses, such as Decision Making Under Uncertainty and Natural Language Processing with Deep Learning.

Jon Krohn: 00:01:38

In this episode, Lauren details what it’s like to study computer science and machine learning at Stanford, one of the most sought-after universities on the planet for those subjects. She tells us about her research on zero-shot multilingual neural machine translation, why you should use principal component analysis to choose your job, the software tools she uses day to day at Glean to engineer natural language processing machine learning models into massive-scale production systems, and her surprisingly pleasant secret to both productivity and success. There are parts of this episode that will appeal especially to practicing data scientists, but much of the conversation will be of interest to anyone who enjoys a relaxed, laugh-filled conversation on AI, especially if you’re keen to understand the state of the art in applying machine learning to natural language problems. All right, you ready for this awesome episode? Let’s go.

Jon Krohn: 00:02:39

Lauren, welcome to the podcast. I am so excited to have you here. Where in the world are you?

Lauren Zhu: 00:02:45

I am in San Francisco, also known as San Frandisco.

Jon Krohn: 00:02:54

People say that?

Lauren Zhu: 00:02:54

No, it’s just a song.

Jon Krohn: 00:02:58

Oh, so I guess you do a lot of clubbing. That’s what you’re out there in San Francisco for?

Lauren Zhu: 00:03:01

Not in the pandemic, but otherwise maybe.

Jon Krohn: 00:03:06

I bet that really put [crosstalk 00:03:07]-

Lauren Zhu: 00:03:07

Don’t worry about it.

Jon Krohn: 00:03:07

That must have put a big damper on your last chunk of time in school.

Lauren Zhu: 00:03:14

Oh yeah. Oh, I don’t want to think about it, but no. Unlocking some memories there that I tried to block out of my mind, but senior year, yeah. I think a chunk of that cut out, but it’s okay.

Jon Krohn: 00:03:27

Yeah. It’s getting better now. San Francisco must be basically completely up and running now.

Lauren Zhu: 00:03:32

I think so, but I haven’t visited the bars and clubs, so I wouldn’t know.

Jon Krohn: 00:03:36

Oh, really? Okay. All right. Not even restaurants. You haven’t been doing that?

Lauren Zhu: 00:03:40

I have been to restaurants.

Jon Krohn: 00:03:40

Taken advantage of that. There you go. All right. So that’s nice. And not everyone in the world has that. I just came from Ontario. I was there over the holidays, the Christmas holidays with my family. And while I was there, the entire province of Ontario, millions of people went into a hard lockdown. No gyms, no restaurants. It was 2020 all over again.

Lauren Zhu: 00:04:02

Oh, no.

Jon Krohn: 00:04:03

Yeah, in 2022. So, I’m sure there are other places like that around the world, and they’re jealous of your ability to go to restaurants, Lauren. Someday soon for the rest of you.

Lauren Zhu: 00:04:15

That’s their fault for not learning cooking. Sorry, I had to throw that [crosstalk 00:04:21]-

Jon Krohn: 00:04:21

Did you learn a lot of cooking through the pandemic or you had it beforehand?

Lauren Zhu: 00:04:24

Well, I definitely did in our lockdown. I had no choice but to fend for myself and cook my groceries. I did do that a lot. Yeah.

Jon Krohn: 00:04:33

All right. Well, I don’t know you for your cooking prowess, though I’m sure it is no doubt exquisite. I know you because of an interesting twist over the internet. Back in episode number 530, I did a Five-Minute Friday episode on 10 AI thought leaders to follow. One of my AI thought leaders was Professor Christopher Manning of Stanford University, and he responded to the tweet as well as to the LinkedIn post that I made about that episode when it came out. I got really excited because I have been a huge fan of his since 2017. Pre-pandemic, I used to run a deep learning study group in New York. We met regularly and studied different materials. Sometimes it was textbooks; sometimes it was course videos. At that time, there were new videos from Christopher Manning’s Natural Language Processing with Deep Learning course, all of them available on YouTube for free.

Jon Krohn: 00:05:32

And so, we studied that for several weeks in this deep learning study group. So, I knew who he was. I love his lecture style; I think he’s so funny. So I got super excited and I was like, “Well, he just responded to my tweet and my LinkedIn post. Maybe I can get him on the show.” So I emailed him, and he responded affirmatively. I think at some point this year, we will have Christopher Manning on the show. While I was doing research on him, I came across you as a TA in his course in 2021. And I loved everything about your background, as the audience is going to see in this episode. It’s super interesting. I think you’re going to be very inspiring for a lot of listeners, and people are also going to learn a ton from you. I think I am going to learn something from you in this episode.

Jon Krohn: 00:06:23

So, when you were TAing his course, you were doing a master’s in computer science at Stanford University. And that course, as I already mentioned, was called Natural Language Processing with Deep Learning. You were a course assistant. So what’s that like? Is it as wonderful working with Christopher Manning in person as it seems over YouTube?

Lauren Zhu: 00:06:45

I’d like to tell you it’s actually even better. He’s wonderful. I mean, obviously, very well known, has put out a lot of really incredible work fundamental to NLP as we see it today. And yeah, getting to work with him is awesome, and the students in the course are also really amazing. So, it was a great experience.

Jon Krohn: 00:07:07

I am so jealous. So tell us about the course content. I could base it on my notes from the deep learning study group in 2017, but I am sure you could do a better job, having more recently been a course assistant on it.

Lauren Zhu: 00:07:25

Yeah, totally. So in the course, we have 10 weeks to go over a lot of stuff, because the Stanford quarter system is pretty brutal, but we start with ground-up fundamentals and then we get into more machine learning type things. We start with word vectors, word representations. Then we cover backprop and neural networks, and how those work. Then we cover the history of NLP and deep learning, too. We start with RNNs, which are recurrent neural networks, and then we get into transformers, more modern-day things, and different applications of NLP and deep learning. We have machine translation, question answering, natural language generation, a lot of really cool stuff. We also talk about the big models of today: BERT, GPT, et cetera. And then we cap it off with a project where students get to work on whatever they want, and it’s really cool to see what they produce.

Jon Krohn: 00:08:35

Nice. That sounds incredible. If my memory serves, the latest lectures are no longer available on YouTube. I mean, the historical ones are. The 2017 ones that I studied back then were pre-transformers. That wasn’t a term. Well, I guess it was a term, but mostly for describing cars that turn into robot people. Those lectures are still available online, but for the latest material, I believe you now have to pay, though I think it’s a small amount. I’ll dig that up and include it in the show notes if I can find it, so everybody who’s listening should be able to follow along with all of the course materials that you just mentioned, which sound amazing. Word vectors: obviously hugely powerful, with an enormously wide range of applications today.

Jon Krohn: 00:09:35

Recurrent neural networks and transformers, which are deep learning techniques for handling natural language information and building models with it. And then getting into specific applications like the ones you mentioned, which really could be the cutting edge of natural language processing today: machine translation, question answering systems, natural language generation. And yeah, of course, today’s state-of-the-art architectures like BERT and GPT-3 are super cool to learn about. So, it sounds like an amazing course, but it isn’t the only course that you TAed at Stanford. You were also a course assistant for From Languages to Information. From my understanding, it sounds like that could be kind of like a precursor to this NLP with Deep Learning course?

Lauren Zhu: 00:10:21

That is totally correct. And the numbers line up that way, too. From Languages to Information is 124, and NLP with Deep Learning is 224. So, it’s really beautiful there. Yeah, I took this course before I took the other course as well. I loved both so much that I ended up TAing both of them. So in this course, we talk about things that are a good intro to NLP, really. What is edit distance? What is text processing? Language modeling, models like naive Bayes. Then we dive more into information retrieval, and there’s a little segment on neural networks again. And then it gets more interesting at the end, too, with different applications: chatbots, recommendation systems, and those kinds of things.
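Edit distance, the first topic Lauren lists, is the minimum number of single-character edits (insertions, deletions, substitutions) needed to turn one string into another. It is usually computed with a dynamic-programming table. The Python sketch below shows the textbook Levenshtein version. The function name and the `sub_cost` parameter are illustrative and don’t come from the course materials. Some textbooks charge 2 for a substitution, treating it as a deletion plus an insertion, and the parameter lets you switch between the two conventions.

```python
def edit_distance(source: str, target: str, sub_cost: int = 1) -> int:
    """Minimum cost of insertions, deletions, and substitutions that turns
    `source` into `target`, filled in with a dynamic-programming table."""
    rows, cols = len(source) + 1, len(target) + 1
    # dist[i][j] = edit distance between source[:i] and target[:j]
    dist = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        dist[i][0] = i  # delete every character of source[:i]
    for j in range(cols):
        dist[0][j] = j  # insert every character of target[:j]
    for i in range(1, rows):
        for j in range(1, cols):
            same = source[i - 1] == target[j - 1]
            dist[i][j] = min(
                dist[i - 1][j] + 1,  # delete source[i - 1]
                dist[i][j - 1] + 1,  # insert target[j - 1]
                dist[i - 1][j - 1] + (0 if same else sub_cost),  # match or substitute
            )
    return dist[-1][-1]


print(edit_distance("kitten", "sitting"))  # 3
print(edit_distance("intention", "execution", sub_cost=2))  # 8
```

One simple use is spelling correction: rank dictionary words by their distance to a misspelled word and suggest the closest ones.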

Jon Krohn: 00:11:12

Super cool. That sounds like what I need to take. I’ve already intensively studied Christopher Manning’s CS 224, the NLP with Deep Learning course that you talked about, and actually a lot of that content is in my book, Deep Learning Illustrated: the NLP content in there is heavily influenced by his CS 224. So, I’m already pretty familiar with that. I’d love to refresh and get up to date on stuff like transformers, and just hear about the latest that’s going on there. But with this course, for a lot of the topics that you’re mentioning, I feel like I have enough of a working understanding that I can muddle my way through, but I’d love to understand them more deeply. So I’d love to study CS 124, From Languages to Information. That sounds awesome. All right. But that’s not it; you’ve TAed other courses as well. You were also a course assistant for Hacking the Coronavirus, which sounds like probably a new course.

Lauren Zhu: 00:12:04

Yeah. That definitely was not a thing in 2019. It was spun up immediately as the pandemic started. We just sort of gathered together a bunch of students who wanted to make an impact and try to help people get through the pandemic. We had all different kinds of projects, really. We had people working on deep learning to try to get the right protein folds for vaccines and the coronavirus, like the actual virus, and that was really cool. We had people working on grocery delivery kinds of things, dashboards for numbers, a huge variety. It was really cool to see the diversity in what people came up with.

Jon Krohn: 00:12:57

That is really cool. And you are also… So, I don’t even know how you had time, because the master’s is what, two years long?

Lauren Zhu: 00:13:05

So, it varies. It’s supposed to be two years long, but we have this thing where, if you do your undergrad there and do what’s called a co-term, you can do a fifth-year master’s, basically. So, you can finish it up in one year. It’s a really good deal.

Jon Krohn: 00:13:24

But you did it in two?

Lauren Zhu: 00:13:26

I did it in one.

Jon Krohn: 00:13:27

You did it in one? And in that time, you TAed… because I haven’t even gotten to the last one. So you TAed four different classes, and we’ve only covered three of them so far. And one of them you did twice. You did that in a year?

Lauren Zhu: 00:13:41

Yeah, I had a little overlap with my senior year.

Jon Krohn: 00:13:46

Wow.

Lauren Zhu: 00:13:46

So, cheating a little bit. So, I started TAing in my senior year. I got too excited, I wanted to teach.

Jon Krohn: 00:13:53

That’s incredible. Okay, so we’ve covered three of the four courses that you TAed already. So, NLP With Deep Learning, you did From Languages to Information twice, you did Hacking the Coronavirus and you were the head TA, which I assume you must get a really special, I don’t know, outfit or something for that.

Lauren Zhu: 00:14:12

I got to wear a special hat.

Jon Krohn: 00:14:16

It’s not true, is it?

Lauren Zhu: 00:14:17

No, no. I wish.

Jon Krohn: 00:14:22

Many thanks to the Master of Data Science program at the University of California, Irvine, for sponsoring today’s episode. The UC Irvine Master of Data Science program blends statistics and computer science principles with partners from industry to empower students to innovate in the field of data science. Located on the tech coast of Southern California, the program gives students a powerhouse ecosystem where over a third of Fortune 500 companies are located. Take a giant leap in your data science career through the UC Irvine Master of Data Science program. To learn more, head to www.superdatascience.com/uci. That’s www.superdatascience.com/uci; check it out. But you were head TA of Decision Making Under Uncertainty. So, what’s the difference between being a head TA and a regular course assistant? And then tell us about that particular course.

Lauren Zhu: 00:15:19

Totally. So being head TA means you get to boss around all the other TAs.

Jon Krohn: 00:15:25

Mark faster.

Lauren Zhu: 00:15:27

I am just kidding. It’s great. As head TA of that class, it was really about making sure things ran smoothly and that I could put out all the small fires that always happen when running a course with hundreds and hundreds of students. That was a large part of it. But Professor Mykel Kochenderfer is the boss, the expert. He knows all about the material and makes sure students are understanding everything. I’m kind of like his sidekick.

Jon Krohn: 00:15:58

Sweet. And then, so I guess that’s some probability theory course in a way?

Lauren Zhu: 00:16:04

Yeah, so the course is about, I guess, taking a computational perspective on how to build decision-making systems. A great example of that is autonomous systems like robotics, autonomous vehicles, that kind of thing. So, there are Bayesian networks, dynamic programming, reinforcement learning, Markov decision processes, just to name a few.
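Value iteration is a standard dynamic programming method for Markov decision processes, which are among the topics listed above. Here is a toy Python sketch of it. It is not course material. The two-state machine-maintenance MDP, its rewards, its transition probabilities, and the discount factor of 0.9 are all made up for illustration. The update repeatedly applies the Bellman optimality equation until the state values stop changing, then picks the greedy action in each state.

```python
from typing import Dict, List, Tuple

# transitions[state][action] = (immediate_reward, [(probability, next_state), ...])
Transitions = Dict[str, Dict[str, Tuple[float, List[Tuple[float, str]]]]]


def value_iteration(
    transitions: Transitions, gamma: float = 0.9, tol: float = 1e-9
) -> Tuple[Dict[str, float], Dict[str, str]]:
    """Apply the Bellman optimality update until the state values stop
    changing, then read off the greedy policy."""
    values = {state: 0.0 for state in transitions}

    def q_value(state: str, action: str) -> float:
        # Expected discounted return of taking `action` in `state`, then
        # following the current value estimates.
        reward, outcomes = transitions[state][action]
        return reward + gamma * sum(p * values[nxt] for p, nxt in outcomes)

    while True:
        new_values = {
            state: max(q_value(state, action) for action in actions)
            for state, actions in transitions.items()
        }
        delta = max(abs(new_values[s] - values[s]) for s in transitions)
        values = new_values  # q_value reads the updated estimates from here on
        if delta < tol:
            break

    policy = {
        state: max(actions, key=lambda action: q_value(state, action))
        for state, actions in transitions.items()
    }
    return values, policy


# Hypothetical example: a machine that earns reward while running but may break.
machine: Transitions = {
    "healthy": {
        "run": (10.0, [(0.9, "healthy"), (0.1, "broken")]),
        "repair": (0.0, [(1.0, "healthy")]),
    },
    "broken": {
        "run": (0.0, [(1.0, "broken")]),
        "repair": (-5.0, [(1.0, "healthy")]),
    },
}
values, policy = value_iteration(machine)
print(policy)  # {'healthy': 'run', 'broken': 'repair'}
print({s: round(v, 2) for s, v in values.items()})  # {'healthy': 87.61, 'broken': 73.85}
```

Repairing costs 5 up front, but it returns the machine to the state where running pays off. With a discount of 0.9 that future reward outweighs the cost, so the optimal policy repairs whenever the machine is broken.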

Jon Krohn: 00:16:32

Wow.

Lauren Zhu: 00:16:33

Yeah, really cool applications there.

Jon Krohn: 00:16:34

I want to go back to school. Sounds awesome. So, clearly you had an outstanding master’s. In addition to TAing a total of five courses in a little over a year, what else is it like doing a master’s in computer science? I mean, we won’t talk about the time under lockdown, but other than that, what’s it like studying at Stanford? Is it fun? Is it interesting? Is it hard?

Lauren Zhu: 00:17:09

I think you nailed it. I think it’s all those things. I mean, the professors here are amazing. The students that you get to work with, like when you work on a group project, are all so passionate about what they study, and it makes it all really fun. And one thing that I also really love about Stanford is that they encourage you to explore all different kinds of things. So even if you study computer science in undergrad, you have so many opportunities to pursue other interests as well, things that might not even be related. And so, you take with you all these different life skills and things that excite you, and you use that in your life, you use that in your job, you use that in whatever, and that’s probably my favorite thing.

Jon Krohn: 00:17:56

So cool. And that wasn’t your only degree at Stanford. You also did a bachelor’s in computer science. You alluded to this when you mentioned how if you do a bachelor’s… Is that in all programs, or is it something specific to computer science, where you can roll it right into an extra master’s year?

Lauren Zhu: 00:18:13

I think it’s a lot of the programs. We have different bachelor’s and master’s degrees available, but for the most part there is that fifth-year option.

Jon Krohn: 00:18:27

Cool. I like that a lot. That is something that I really liked about Oxford University, where I did my PhD. They have a similar kind of thing where, in a lot of the bachelor’s programs, especially in science or engineering, you can do a fourth year that… So, in England, undergrad degrees are three years, not four. So the fourth year ends up being this master’s year, and you get to do more project-based stuff and get really deep into the research on something. I think that’s such a cool thing, and it would make sense to me to have more and more of those all over the place. So, in your bachelor’s, did you always know that you wanted to do that extra master’s?

Lauren Zhu: 00:19:09

I would say yes. I loved everything that I was learning. I feel like I’m a lifelong learner, and if I could be in school forever, I would consider that.

Jon Krohn: 00:19:20

Totally.

Lauren Zhu: 00:19:21

It’s just that I needed more time to take more classes and explore, and also to have the chance to TA.

Jon Krohn: 00:19:29

Nice. And yeah, in addition to TAing in your masters and I guess the end of your bachelor’s there, you did tons of extracurricular stuff. You were on the Stanford jump rope team.

Lauren Zhu: 00:19:41

Oh, my gosh. Yeah, my favorite.

Jon Krohn: 00:19:45

You were co-president of Women in Computer Science. That sounds like it came with a fair bit of responsibility. And you also do a fair bit on the side: I can see from your website that you are a wonderful singer and guitar player. So you clearly have a lot of side hobbies, and I think we’ll get to that later, as having these extra things on the side is, paradoxically, part of your productivity technique. But I don’t know if there’s anything in particular you want to talk about there. Maybe the co-presidency of Women in Computer Science: what is that, and how is it helpful for women at Stanford?

Lauren Zhu: 00:20:26

Yeah. Universities are roughly 50/50 in their gender split, but in engineering that’s often not the case. So there’s always a need to empower minority groups, and not just women, but all different kinds of minority groups. That’s something that I was really passionate about for sure, and I try to take that to industry and to other parts of my life as well.

Jon Krohn: 00:20:56

Nice. So, I love that idea. How practically do we do it? How do we empower minority groups?

Lauren Zhu: 00:21:03

Yeah. I mean, there’s a lot of different things. It’s the network. It’s the support group, providing opportunities, providing different ways to access things out there, really. Awareness, all different kinds of things.

Jon Krohn: 00:21:18

Nice. I love it. So, in addition to all of those things that you did at Stanford, you also made enormously good use of your summers off. You were a software engineering intern at Qualcomm. You were an AI researcher at the University of Edinburgh. You were a machine learning intern at a place called Greenfield Labs, which, if people haven’t heard of it, is a subsidiary of Ford Motor Company working on autonomous vehicles. And you were also a machine learning intern at, how do you pronounce that, Apple? Oh, oh, Apple. Right, right, right. I’ve heard of them.

Lauren Zhu: 00:22:03

That’s how you pronounce your stock ticker.

Jon Krohn: 00:22:09

So, clearly you have a ton of experience in really interesting roles at top companies and universities all over the world. And we haven’t even talked about the fact that you’re now a software engineer at Glean, which is a really cool venture capital-backed startup in Silicon Valley. So, I’d love to hear one or two interesting use cases from the work experience you already have. In particular, I spent time during my PhD at the University of Edinburgh doing AI research; while I was at Oxford, I collaborated with researchers there. This might not be super well known, but the University of Edinburgh has been one of the top AI programs in the world for decades. So, I’d love to hear about the work that you were doing there.

Lauren Zhu: 00:22:57

Yeah. That was a really fun experience. Not just because it was in Scotland and the sun set at 10:00 PM every day.

Jon Krohn: 00:23:04

You were there in the summer.

Lauren Zhu: 00:23:05

I was there in the summer. Oh, that was great.

Jon Krohn: 00:23:08

Yeah. That is very lucky, because if you’re there in the winter, it’s setting at 2:00 every day.

Lauren Zhu: 00:23:12

Oh, yeah. And raining every day. Yeah, but I would go hiking at night, which gave me energy to do some really cool research during the day. I worked with Professor Rico Sennrich, and we worked on zero-shot… Sorry, this is a mouthful. Zero-shot multilingual neural machine translation.

Jon Krohn: 00:23:37

Nice. Got it.

Lauren Zhu: 00:23:37

Did I say that right? Yeah, I said that right.

Jon Krohn: 00:23:41

Yeah, you nailed it.

Lauren Zhu: 00:23:42

Yeah. So, let’s take that apart. Machine translation is like Google Translate: it’s one language to another. Neural machine translation is that, but with neural networks. Multilingual machine… Oh, shoot. I messed it up. Multilingual neural machine translation is NMT, the other part, but with multiple languages, meaning you have one model that does all different directions of machine translation. So, English to French, German to Chinese, whatever, et cetera.

Jon Krohn: 00:24:19

How many different languages can one model do?

Lauren Zhu: 00:24:24

It can do as many as it wants, with as much data as is provided.

Jon Krohn: 00:24:30

Wow. Okay. Wow.

Lauren Zhu: 00:24:30

Yeah. And then we have the last one, zero-shot, which means you can translate from one language to another without any supervised data from that first language to the second language.

Jon Krohn: 00:24:46

Wow, crazy.

Lauren Zhu: 00:24:47

So, it’s very hard. It’s definitely a task that’s being worked on actively, but the general technique at the time was to basically take whatever data you had with the language pairs that you had. Let’s say you have English to French. Let’s say you also have French to German. If you train this model with these two language pairs and you want to translate all the way from English to German directly, magically, all you have to do is provide some kind of token saying what language you start with and what language you want, and the model magically knows. It’s just going to do that, and secretly, behind the scenes… Oh yeah, go ahead.
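On the data side, the trick Lauren describes can be sketched as plain preprocessing. Every source sentence gets a token naming the language the shared model should produce, and all language pairs are pooled into one training set. The `<2xx>` tag format follows the convention used in Google’s multilingual NMT work and is an assumption here, as are the helper names. Only a target-language token is shown, although some systems also add a source-language token. The sketch trains no model. It only shows why English to German becomes a zero-shot request when the training data contains just English to French and French to German pairs.

```python
from typing import Iterable, List, Set, Tuple

# One parallel example: (source_lang, target_lang, source_text, target_text)
Example = Tuple[str, str, str, str]


def tag_source(text: str, target_lang: str) -> str:
    # Hypothetical tag format: tells the shared model which language to emit.
    return f"<2{target_lang}> {text}"


def build_training_data(corpus: Iterable[Example]) -> List[Tuple[str, str]]:
    """Pool every language pair into one (model_input, model_output) dataset."""
    return [(tag_source(src, tgt_lang), tgt) for _, tgt_lang, src, tgt in corpus]


def supervised_directions(corpus: Iterable[Example]) -> Set[Tuple[str, str]]:
    """Translation directions that actually have training examples."""
    return {(src_lang, tgt_lang) for src_lang, tgt_lang, _, _ in corpus}


corpus = [
    ("en", "fr", "Good morning", "Bonjour"),
    ("fr", "de", "Bonjour", "Guten Morgen"),
]
print(build_training_data(corpus))
# [('<2fr> Good morning', 'Bonjour'), ('<2de> Bonjour', 'Guten Morgen')]

# English -> German has no supervised data, so this request is zero-shot.
print(("en", "de") in supervised_directions(corpus))  # False
print(tag_source("Good morning", "de"))  # <2de> Good morning
```

The model never sees an English input paired with a `<2de>` tag during training. Whether it handles that combination well depends on the shared internal representation described next in the conversation.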

Jon Krohn: 00:25:37

Yeah. So, let me just repeat this back to make sure I understand. If you have some kind of English to French translation dataset, and then a French to German translation dataset, you could, with this multilingual model, go straight from one end to the other, avoiding French entirely in the middle. So, it goes straight from English to German.

Lauren Zhu: 00:25:59

Yeah. That’s exactly correct. And what’s happening behind the scenes is the model is learning this universal embedding, this universal interpretation of language. And so, if you know how to translate out of English into French, if you understand French, you also understand this universal concept of language. So then you put German at the other end, you can just take the whole French part out of it and translate from English to some universal understanding and then into German. And so, it is a very cool and beautiful thing.

Jon Krohn: 00:26:39

So, there’s this universal understanding of the concepts in the middle. So, a high-dimensional embedded representation of meaning.

Lauren Zhu: 00:26:49

Exactly.

Jon Krohn: 00:26:49

Oh, cool. And then, so that’s how it works theoretically with any languages. You just take some input language, you turn it into the universal representation, and then you can spit it out into whatever target language you want.

Lauren Zhu: 00:27:05

Yeah, exactly.

Jon Krohn: 00:27:06

Easy.

Lauren Zhu: 00:27:08

What’s really cool about this is that it helps a lot for what we call low-resource languages: languages that don’t have a lot of training data. So let’s say you only have English to alien language, but you want to translate German to alien language. There’s no way to do that with a regular model; you need this multilingual model for that.

Jon Krohn: 00:27:27

Cool. All right. Man, I can’t wait until the future when we need to be speaking alien languages. How far off are we from that?

Lauren Zhu: 00:27:35

Can’t tell you.

Jon Krohn: 00:27:37

I loved your explanation of zero-shot multilingual neural machine translation, and I loved the way that you worked your way backwards through that definition, from machine translation to neural machine translation, to multilingual NMT, to zero-shot multilingual NMT. It was very easy for me to follow. That was a clever way to do it.

Lauren Zhu: 00:27:56

Thank you.

Jon Krohn: 00:27:56

All right. So then, more recently, you’ve been at Glean full time since the summer of 2021 as a software engineer. So what are you up to over there?

Lauren Zhu: 00:28:08

This company is great. The product that we’re building is something that I realized I needed for a long time. What we’re building is search for your work. As a listener, you’ve probably encountered this: you’re trying to work on something, and you don’t know anything about it. You don’t know if it’s been updated, who’s been touching it, what it relates to. You want to find all the tickets about it. With Glean, you can just search and find all the results within your company across all the data sources that you use, like Google Drive, Slack, GitHub, JIRA, whatever. It’s a hard problem, and there’s a lot of ML and natural language understanding that goes into it as well. And that’s-

Jon Krohn: 00:29:05

I bet.

Lauren Zhu: 00:29:05

That’s what my team works on in search intelligence. And so, we’re doing the ML and NLP side of things.

Jon Krohn: 00:29:12

Super cool. So the way that you describe that there, it sounds like you’re searching through documents mostly, but could this also work maybe in the future through your data sets? Like as a data science researcher, you could use some future Glean tool to just find the right data across a whole bunch of different databases or something like that?

Lauren Zhu: 00:29:36

Yeah. I mean, that’s a really interesting use case, and we’re definitely starting from the ground up with documents, but as we grow, we’re going to be tackling all different kinds of aspects of search and understanding.

Jon Krohn: 00:29:49

Cool. Struggling with broken pipelines, stale dashboards, missing data? You’re not alone. Look no further than Monte Carlo, the leading end-to-end data observability platform. In the same way that New Relic and Datadog ensure reliable software and keep application downtime at bay, Monte Carlo solves the costly problem of data downtime. As detailed in episode number 499 with the firm’s brilliant CEO, Barr Moses, Monte Carlo monitors and alerts for data issues across your data warehouses, lakes, ETLs, and business intelligence, reducing data incidents by 90% or more. Start trusting your data with Monte Carlo today. Visit www.montecarlodata.com to learn more.

Jon Krohn: 00:30:41

I love that idea, and I can see how it would come in super handy. Recently, I was trying to find a document that I knew I’d created in April of last year, but I couldn’t remember where it was. I use a lot of different word processing tools for creating documents or slides. I’m always jumping between different applications, because if a document is going to have a lot of math, I want to do it in LaTeX, but if it isn’t, then I can probably make it faster in Google Slides or Google Docs, for example. So, I am constantly jumping between different applications for different purposes, but then a year later, I can’t remember which one I picked. [crosstalk 00:31:23]. And I’m like, “Oh, well, but I must have shared it with this person.” Oh, but then how did I share it with them? Did I email it to them? Did I Slack it to them? So you just have this tree of possibilities, and it makes finding documents really hard. And then what if the title I gave it was really dumb relative to the content that’s in it? So, yeah, I would love your tool.

Lauren Zhu: 00:31:48

Yeah. It’s so great because then you don’t have to worry about any of that anymore. You think you know maybe a keyword or two words and it’s not just keyword-based search. It’s also understanding-

Jon Krohn: 00:32:01

I’m not surprised, given everything you’ve said so far, that you wouldn’t be working at a company that was doing this with keywords alone.

Lauren Zhu: 00:32:07

Yeah. So, it’s really fun to work on direct applications of all these cool NLP things into something that people can use every day and will use every day.

Jon Krohn: 00:32:18

Nice. I love it. So what does that mean to be on the search intelligence team? You’re a software engineer on it. What other kinds of roles are on that team and then how does it interface with other parts of the company?

Lauren Zhu: 00:32:29

Totally. So, in search intelligence, we are part of the brain behind how the search algorithm works. We have the query understanding and document understanding side of things, and then we have ranking: once you find documents, how do you actually rank them, and in what order do you show them to the user? That’s also really important. Search is a really complicated problem with all different kinds of components, and focusing on the NLP is definitely what I can contribute to. There are so many ways to apply things like named entity recognition, spellcheck, question answering, document summarization, all these cool things that you see in papers. We’re working on that here.
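The episode doesn’t describe how Glean ranks results, and Lauren is clear that its search goes beyond keyword matching. For contrast, here is a minimal Python sketch of the classic keyword baseline that learned ranking tries to improve on: TF-IDF weighting plus cosine similarity. The documents, the lowercase whitespace tokenizer, and the `log(N / df) + 1` IDF variant are illustrative assumptions, not Glean’s method.

```python
import math
from collections import Counter
from typing import Dict, List

Vector = Dict[str, float]


def tokenize(text: str) -> List[str]:
    # Naive lowercase whitespace tokenizer; real systems do far more here.
    return text.lower().split()


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank(query: str, docs: Dict[str, str]) -> List[str]:
    """Return IDs of documents sharing at least one term with the query,
    best match first, scored by TF-IDF cosine similarity."""
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for toks in tokenized.values() for term in set(toks))
    # Rarer terms get larger weights; the +1 keeps very common terms nonzero.
    idf = {term: math.log(len(docs) / df) + 1.0 for term, df in doc_freq.items()}

    def tfidf(tokens: List[str]) -> Vector:
        # Query terms never seen in the collection carry no signal, so drop them.
        return {t: count * idf[t] for t, count in Counter(tokens).items() if t in idf}

    query_vec = tfidf(tokenize(query))
    scored = [(cosine(query_vec, tfidf(toks)), doc_id) for doc_id, toks in tokenized.items()]
    # Sort by descending score, breaking ties by document ID for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored if score > 0]


docs = {
    "q3-roadmap": "Q3 roadmap for the search ranking project",
    "oncall": "oncall rotation and pager schedule",
    "ranking-notes": "notes on ranking experiments and ranking metrics",
}
print(rank("ranking roadmap", docs))  # ['q3-roadmap', 'ranking-notes']
```

The baseline has no way to match "plan" to "roadmap" or to recognize a person’s name in a query. That kind of gap is what query understanding, document understanding, and learned ranking aim to close.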

Jon Krohn: 00:33:25

Yeah. You’re implementing it at scale. So you’re taking academic ideas, things that have been published in papers and maybe somebody put a GitHub repo together that shows how to do it on a demo data set, but then you’ve got to take these ideas, blend them all together, engineer them together, and then do it at a massive scale.

Lauren Zhu: 00:33:47

Yeah. That’s what I’m trying to figure out how to do, too. I just started. One thing that’s really interesting is that you learn about these techniques and how they work in an academic setting, and then you try to apply them in industry, and it’s very different. It’s very different because the data’s dirty and you have to clean it, right? You have to put it in some pipeline that runs at weird times and has to be in sync with everything else. It’s definitely more challenging, but that’s a really exciting part of the fun.

Jon Krohn: 00:34:19

Yeah. So, I have more questions coming up for you about what day-to-day life is like for you at Glean and what kinds of tools and approaches you use. But just before we get there, I have a couple of other questions for you. So, I see that you are something called a Contrary fellow. What does that mean, and how does that complement what you do in your full-time job?

Lauren Zhu: 00:34:41

Yeah. So, Contrary is actually a VC firm based here in San Francisco. And a couple of years ago they spun up something called the Contrary Talent Network. I don’t know if it was renamed, but I think it’s still that. And they gathered a group of 100 or so people who are about my age, like college grads who are really interested in tech and entrepreneurship, and we have different events together. We hang out, we talk, we have a Slack channel where we just blast whatever we’re interested in all the time. And it’s just a very engaging, exciting network to have, to keep everyone interested and excited about what they do. I’ve learned so much from these people as well. It’s an awesome network.

Jon Krohn: 00:35:35

I love that. So, if there’s a listener out there who’s interested in being involved in this fellowship, how do they apply?

Lauren Zhu: 00:35:42

Yeah. So, there is a… Yeah, if you Google Contrary talent or Contrary fellowship, it’s contrarycap.com, and there’s a way to apply, and there’s an annual cycle. So, it might currently be closed, but it’ll definitely have a new cohort in the next year.

Jon Krohn: 00:36:01

Nice. Super cool. So, annual cohorts, that makes a lot of sense. You can do onboarding all together and get to know each other.

Lauren Zhu: 00:36:06

Totally.

Jon Krohn: 00:36:07

Is there an in-person component, maybe in the future, in a post-pandemic world?

Lauren Zhu: 00:36:12

Yeah. So, the first cohort started, of course, in 2020, when no one was able to meet anyone. So, that had its challenges. But since then we’ve had some in-person gatherings. Definitely, if/when, very depressing, but if/when the pandemic gets better, there will be more in-person things for sure.

Jon Krohn: 00:36:34

That reminds me, I recently watched… I don’t think I’ve ever mentioned this on air, but I’m a huge South Park fan. And they recently released a movie that is available only on Paramount+. So, I had to get the free Paramount+ trial for a week just so I could watch this movie, and I did cancel it. I’m very good about setting reminders so I don’t get sucked into the auto subscription, the auto renewal. And so, this movie, and this isn’t a spoiler because you see it right at the beginning: South Park, the show, has been around for decades, and like in all cartoons, the characters always remain the same age.

Jon Krohn: 00:37:13

So, they’re always, I don’t know, fourth graders, fifth graders. I can’t remember, but they’re kids in elementary school. The movie starts with, “Oh, the pandemic is finally coming to an end,” and all of them are grownups. So, they’re in their forties or fifties, and the pandemic is just starting to come to an end. And it makes for a really fun premise for the movie. They did such an amazing job. Calling it a movie was totally merited. They put a lot of effort not only into the animation but also into the storyline, above and beyond what they would do for an episode, in addition to just the length. And so, yeah, it’s a really fun thing. If you’re into South Park, I do recommend getting a Paramount+ subscription for one week to check it out.

Lauren Zhu: 00:38:08

Don’t forget to cancel it.

Jon Krohn: 00:38:09

Don’t forget to cancel it. Exactly. So, all right. I didn’t expect to be talking about South Park with you, but yeah. So, another thing that I wanted to ask you before we get into your day-to-day stuff at Glean: I know that you wrote a blog post about how you picked your job. So, maybe you could let us know how you ended up choosing Glean from, no doubt, the myriad opportunities that were available to you as somebody who had a master’s in computer science, or was about to have a master’s in computer science, from Stanford, had TAed tons of the most well-known data science and machine learning classes on the planet, and had already done internships at the likes of Apple, Ford, University of Edinburgh, and Qualcomm. So, you must have had your pick of opportunities. And I know that you wrote a blog post that invoked data science to try to come to an answer. So, why, Lauren, should you run a principal component analysis to choose your job out of college?

Lauren Zhu: 00:39:16

This is both the nerdiest and most philosophical thing ever, but I feel like this is the best way to decompose and separate all the rush and all the complicated thoughts that one may have during the terrible, terrible process of recruiting from what is fundamentally important. So for me, when I was going through recruiting, I was overwhelmed: the pandemic was happening, I didn’t know what I wanted, and there were all these opportunities that I could possibly go with. And in order to make actual progress in my decision making, I had to run a PCA. I had to take the 100 dimensions, the 100 different factors, you could say, that make one job better than another, and condense them down to five.

Jon Krohn: 00:40:14

All right. Okay. So, let’s take one quick step back here.

Lauren Zhu: 00:40:18

Totally.

Jon Krohn: 00:40:18

For listeners who are unaware, PCA is a technique that we can use to… yeah, as Lauren just said, reduce the dimensionality of a dataset. So, if you had, and I’m starting to think… I was immediately staggered by the dataset that you might have for this. So, each row would be a different job opportunity, maybe like a company name, and then you actually had 100 columns for each row?

Lauren Zhu: 00:40:46

Disclaimer, there’s no actual PCA with numbers that was done. This is a-

Jon Krohn: 00:40:55

It’s philosophical PCA.

Lauren Zhu: 00:40:56

… philosophical PCA. Yeah, so if what’s keeping you up at night is the 100 rows, I guess the 100 dimensions, K equals 100, in your mind, just filter it down to the top five. Sorry, there are no numbers involved. If one wanted to, you could totally run one. You could totally do that.

Jon Krohn: 00:41:27

That could actually be a great project to show all of the jobs that you’re applying to. You’re like, “I need to explain.” So, you end up here on this first principal component and here on the second principal component. And as you can see, that positions you favorably relative to these other firms.

Lauren Zhu: 00:41:42

That’s so funny. Yeah, but basically, you think about compensation, you think about the people you work with, you think about the location, you think about all these different things. Really, just pick three to five things and then choose the place that meets those criteria the best.

Jon Krohn: 00:42:02

Right. And so, you’re choosing the principal components, the ones that you think explain the most variance in your happiness on the job, I guess?

Lauren Zhu: 00:42:10

Totally, it should be about happiness. Always choose happiness. Yeah, so that’s what I did, and it worked out for me. I ended up at Glean, and I think I chose my principal components correctly. It was great.
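Lauren is clear that no numbers were crunched. For listeners curious what a literal version of Jon’s imagined project might look like, here is a minimal NumPy sketch of PCA via the singular value decomposition. The offers and their 1 to 10 scores are entirely hypothetical, and the five factor names are only borrowed from the ones she mentions. One nuance: picking the three to five factors you care most about is closer to feature selection, since a true principal component is a weighted mix of all of the original factors.

```python
import numpy as np

def pca(X, k):
    """Project the rows of X onto their top-k principal components.

    Returns (scores, explained_variance_ratio): scores has shape (n_rows, k),
    and the ratios are ordered from largest to smallest.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)              # center each factor (column) at zero
    # SVD of the centered matrix: rows of Vt are the principal directions,
    # and the singular values come back in descending order.
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    variance = S**2 / (X.shape[0] - 1)   # variance captured by each component
    ratio = variance / variance.sum()    # fraction of the total variance
    scores = Xc @ Vt[:k].T               # coordinates on the first k components
    return scores, ratio[:k]

# Hypothetical scores: rows are job offers, columns are factors
# (people, technical work, autonomy, culture, compensation).
offers = [
    [8, 6, 7, 9, 5],
    [6, 9, 5, 6, 8],
    [9, 5, 8, 7, 4],
    [5, 8, 6, 5, 9],
]
scores, ratio = pca(offers, k=2)
print(scores.round(2))
print(ratio.round(2))
```

`ratio` is the share of variance each component explains, the quantity Jon alludes to, and the two columns of `scores` are the coordinates for his “first and second principal component” plot of the offers.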

Jon Krohn: 00:42:26

Awesome. What were yours? What were your three to five principal components?

Lauren Zhu: 00:42:30

Totally. I would say the people were huge for me. In the previous internships I had, I loved my teams. I loved the things that I was working on. I wanted to be around a diverse group of people, sort of like-minded, passionate about the project, the product that they’re working on. Technical work was another huge one. Working on NLP was really important for me: NLP in industry, ML in industry, and also having autonomy over my projects. I’m the first new grad on my team, and so I’ve been pleasantly surprised at how much autonomy I’ve been given to work on what I do, and that’s been a huge amount of fun. And also just an amazing company, in terms of work culture and mission, where I’m happy to go to work every day.

Jon Krohn: 00:43:26

So cool, Lauren. I’m so glad that you found it. So, we’ve talked about Glean a little bit. You’ve explained what you do on the search intelligence team, and you’ve talked about how that interrelates with other parts of the business. What is the day-to-day like for you at Glean? What kinds of tools do you use?

Lauren Zhu: 00:43:44

Totally. For sure. As a machine learning engineer, there are a lot of different things that happen because you have to understand the stack and the systems. So, first and foremost, I’m a software engineer, and even if I’m not training models, I’m helping to connect different parts of the backend with different features, things that relate to NLP as well. And when you have an idea, getting it out into a product is a long process, because you have to come up with the idea, think about how you would do it, start sandboxing, get numbers about how it’s doing, think of ways to make sure that it’s good, and then you have to test it thoroughly. Then you have to test it on what we call deployments, test it on customers, and then you can land it. And so, that requires a lot of different tools. I do a lot of sandboxing in Google Colab, actually. You can link it up to a local backend, so it’s a really nice notebook way to do that. That’s one of my favorite things. And then for offline pipelines, we also use Apache Beam, which, like Spark, is for working with big data. So, proficiency in that is also really, really nice.
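Apache Beam expresses batch jobs as chains of transforms such as `beam.FlatMap`, `beam.Map`, and `beam.CombinePerKey`, which a runner (for example Google Cloud Dataflow, Flink, or Spark) then distributes across machines. The sketch below is not Beam code: it runs the same classic word-count pattern in plain Python on one machine, purely to show what each stage does. It says nothing about Glean’s actual pipelines, and the `word_count` function is hypothetical.

```python
from collections import defaultdict

def word_count(lines):
    """Count words using the flat-map / map / combine-per-key pattern."""
    # FlatMap stage: one input line fans out into many words.
    words = (word for line in lines for word in line.lower().split())
    # Map stage: each word becomes a (key, value) pair.
    pairs = ((word, 1) for word in words)
    # CombinePerKey(sum) stage: group the pairs by key and sum their values.
    counts = defaultdict(int)
    for key, value in pairs:
        counts[key] += value
    return dict(counts)

print(word_count(["Glean search", "search intelligence search"]))
```

Because each stage only looks at one element (or one key) at a time, a framework like Beam can split the input across many workers and merge the per-key results, which is what makes the pattern suit big offline jobs.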

Jon Krohn: 00:45:23

Cool. Nice, super cool tool choices, Lauren. I love using Colab myself. If people do online courses with me, I very frequently use Colab. I haven’t used it attached to a local backend before, as opposed to just being on the internet using Colab notebooks. That sounds super cool. Something I’d love to try out.

Lauren Zhu: 00:45:44

That was my first time using it in industry, too.

Jon Krohn: 00:45:47

Oh, yeah?

Lauren Zhu: 00:45:47

Yeah. It’s a really cool thing.

Jon Krohn: 00:45:49

Yeah, it does have… So, for listeners who are familiar with Jupyter Notebooks, Colab is similar. It’s based on the idea of Jupyter Notebooks: executing code, adding in Markdown to annotate your notebooks, having plots right there in the notebook. But Colab does a really great job of providing you with information about all of the variables and methods in your code just by hovering over them. And so, that’s something that I could imagine would be really useful about using Colab.

Jon Krohn: 00:46:21

The only thing that annoys me about it, and there might be some way to customize this, is that because I’d been using Jupyter Notebooks for so many years before, I got super used to all of the hotkeys. Many of them are the same, but some of them are different, and they throw me off. There are a few key things, and I can’t think of any off the cuff right now, but there are some things that I do all the time in Jupyter Notebooks that I haven’t figured out how to do in Colab, and I think that by default there isn’t a Colab way of doing them. And so, I keep having this thought, “Oh, I’m going to figure out how to customize this somehow.” And so, maybe this is it. If I can figure out how to have it running on a custom backend, maybe then I can also customize the hotkeys in a way that I can’t on the internet.

Lauren Zhu: 00:47:02

Let me know if you figure that out.

Jon Krohn: 00:47:04

I will. I will be letting the whole world know. And then Apache Beam, very cool way to run processes over large amounts of data. Not one that I’ve used personally, but that machine learning engineers on my team have used. And so, I know that it can be a great choice in a lot of situations. Cool. So, Lauren, I think given your breadth of expertise working with lots of different kinds of students and already working in lots of different kinds of companies in your career, what skills are most important for people to possess today, whether they’re engineers or data scientists?

Lauren Zhu: 00:47:45

Yeah, I would say first and foremost, keep learning, always. Read the papers, read about what’s new and out there, and play around with it. Be a good engineer, be a good data scientist. And then also, an alternative answer, and you alluded to this earlier, but paradoxically, I would say pursue your passion projects, which might not even be related to this at all. For me, one example is that I just moved here to San Francisco, and I don’t have a lot of furniture in my house. I am going crazy about interior design, and I love art, and I love photography and music. And so, now I’m just looking around the city for inspiration for how to design my apartment. And that actually gives me a lot of energy every day. That also helps me get out of bed every day, along with the cool things that I am doing at work. So, the more things that excite you, the better, and that’ll make you happy. And if you’re happy, you’ll be a good data scientist. That’s my secret.

Jon Krohn: 00:49:04

I love that. You do seem really happy.

Lauren Zhu: 00:49:06

Thank you.

Jon Krohn: 00:49:06

You seem like a deeply happy person. It’s very enjoyable shooting this episode with you. I feel very lucky. All right, so, awesome answer to that question. And that is not an answer I’ve had before to a question like that: to pursue your passion projects because this energizes you for all aspects of your life, including your work life. And if you’re happier, studies show that you think more creatively and see more possibilities than if you are stressed.

Lauren Zhu: 00:49:33

Absolutely.

Jon Krohn: 00:49:34

So, I do believe that that is a great piece of advice, Lauren. So, I think that also covers the next question that I had for you about non-technical traits that make a great engineer. It seems like we just covered that.

Lauren Zhu: 00:49:48

Yeah. I mean, be creative, right? Think outside of the box. There’s no way that technology’s going to keep improving; algorithms and methods are going to stay the same unless people think outside of the box, and that’s how stuff happens. So, I just want to emphasize creativity, everyone.

Jon Krohn: 00:50:11

More interior design, everyone.

Lauren Zhu: 00:50:13

Yeah. Do that.

Jon Krohn: 00:50:17

All right. And then it goes without saying that you are an extraordinarily productive person. You have managed to do so many different things in parallel. You pursued first a Stanford bachelor’s degree in computer science, then a master’s in computer science. In your bachelor’s years, you were doing things like being co-president of Women in Computer Science and traveling all over the world. And then during your master’s, you were a TA for so many different classes, a total of five. Now that you are working full time, you’re still finding time to do interior design and pursue other interests, music, art. So, how do you do it? What are your tricks for being so productive?

Lauren Zhu: 00:51:08

Yeah, I would say being healthy and resting yourself. This is kind of a similar theme: having enough energy and mental space to learn, because there’s no way that you can learn and be productive if you’re tired or stressed. And the hours that I work, I feel, are very, very productive because my head is clear and I’m happy and I’m healthy.

Jon Krohn: 00:51:37

Right. Yeah, that’s a key thing. You see, there are some occupations out there, like mergers and acquisitions in investment banking, or strategy consulting. There are these kinds of career choices where you hear about these crazy long hours. You’re traveling to the client somewhere else. You have to wake up at 4:00 in the morning to make your 6:00 AM flight. You land in the client’s city at 8:00 AM, and then you’re in meetings till very late. And personally, and there might be exceptions out there, but certainly for me, and I suspect for most people, a lot of those hours that you’re “working,” I can’t imagine they’re particularly productive, and they’re certainly not innovative. I agree with you 100% that to be doing my best work, to be supporting my company as best as I can, and probably serving listeners as best as I can, I need to be getting lots of rest.

Jon Krohn: 00:52:47

So, something that I’ve talked about in episodes before: I use something called the Pomodoro technique to track tasks that I’m working on. In episode number 456, which aired in March of last year, I talked about this Pomodoro technique. It time boxes, and I know… I think you’re going to talk about time boxing in a second, too, but it gives you these 25-minute sprints of work, and then you’re supposed to take five-minute rests. Sometimes I’m really in the groove and I’m like, “No, I’m not taking a break. I’m doing another 25 minutes,” but then after two 25-minute sprints in a row, I’m like, “Okay, it’s probably time to stand up and walk around.” And so, the most Pomodoros that I can sustainably do in a day is 16. If I do 16 a day over a five-day work week, I am gassed.

Jon Krohn: 00:53:41

So, if you think about that, each one is 25 minutes. Let’s call it half an hour to make the math simpler for me, since I’m doing it off the cuff. So, we’re talking eight hours of work, five days a week, of focused deep work where you’re not distracted by phone calls. You’re not doing other things. You don’t have the TV on. You are focused on that task. And no matter what I do, if I try to reshape my life in some way to make that 20, it is unsustainable. I cannot. I will begin to have Pomodoros where I’m just like, “What am I doing here?” I’m just pushing information around or just not… I’m just distracted. I can’t stay on track. I certainly can’t think creatively. So, I agree with you 100%.
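Jon rounds each 25-minute sprint up to half an hour (sprint plus five-minute rest) to get his eight hours. The quick calculation below, assuming exactly one short rest per sprint and ignoring the longer break that the technique traditionally adds after every four sprints, separates focused time from time on the clock. The helper name `pomodoro_minutes` is hypothetical.

```python
def pomodoro_minutes(sessions, work=25, rest=5):
    """Return (focused_minutes, total_minutes) for a number of Pomodoro sessions.

    Assumes each session is followed by one short rest; the longer break
    traditionally taken after every four sessions is ignored.
    """
    return sessions * work, sessions * (work + rest)

for n in (16, 20):
    focused, total = pomodoro_minutes(n)
    print(f"{n} sessions: {focused / 60:.2f} h focused, {total / 60:.1f} h with breaks")
```

So 16 sessions are 480 minutes (8 hours) on the clock, of which 400 minutes (about 6 hours 40 minutes) are focused work; over five days that is 40 hours on the clock. Pushing to 20 sessions would mean 10 hours a day.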

Lauren Zhu: 00:54:28

Yeah, I mean, when I was a student, I felt the same thing. My friends, some people would work on Saturdays and Sundays, and I’m like, “Why don’t you just work productively on the weekdays, take the weekend off, partially, enjoy yourself and rest well, and then have that energy to work harder during the week?” Definitely, I am guilty of some of this too: on Saturdays I would try to work during the whole day and really get nothing done. And then I would feel bad that I wasted my Saturday. So, really just making sure that you set aside time to rest and do the things that you love is so important.

Jon Krohn: 00:55:12

Yeah. You’re 100% right. Through the pandemic, I have been worse than usual. I’m always… yeah, I’m frequently trying to burn the candle at both ends, but through the pandemic I’ve been really bad about it, and you’re 100% right. I, and probably a lot of listeners out there, need to be better about having space for other pursuits, other passions, on weekends and holidays and evenings, if you can make the time. And yeah, no doubt, at the times that I do create that space, I am so much more energized, productive, and creative in my workday. So, a good reminder for everyone, no doubt.

Jon Krohn: 00:55:51

Okay. So, Lauren, we’ve talked about your early career successes and how you achieved those successes, and your productivity tips, which are really pleasant ones, because everyone wants more happiness in their life. So, given where you are in your career, and given the incredible richness of experience that you already have with machine learning engineering, here’s a question that I love to ask. I don’t ask it all the time, but I think you’re going to have a really interesting answer. Thanks to exponentially cheaper data storage, exponentially cheaper compute, way, way, way more sensors everywhere collecting way, way, way more data, interconnectivity, being able to do classes remotely, and papers being published on arXiv as soon as they’re ready with GitHub code going up at the same time, technology advances are happening at an exponentially faster pace each year. And there’s no place that we feel this more than in data science or machine learning. So, you are really at the beginning of your career; you haven’t even been working full time for a year yet, so you have decades of career ahead of you. What excites you about the future? What do you think could happen over these coming decades that will transform society?

Lauren Zhu: 00:57:11

Oh, my gosh, there is so much cool stuff happening now, and more things that I don’t even know about will be happening in the future. But just take a look at some of the really amazing things that are happening now: the fact that I can speak into my Apple Watch completely normally, not like, “Hello, my name is,” but just how I normally talk, and it understands me at the rate that I speak, transcribes things, and can just send it to someone. That is really cool. Autonomous driving, who knows if that will pan out, but there’s been really good progress so far, and I’m really excited to see how far that’s going to go. All these things can only happen with a lot of data and really powerful compute. And so, if you get more of that, you get more of these really cool applications, things that are just mind-blowing, and I can’t wait to integrate more of those things into my life. I don’t even know what they’ll be. It’s really exciting.

Jon Krohn: 00:58:16

Yeah. I agree. And very cool: those two examples you mentioned follow right on from the internship experience you had at Apple and Greenfield Labs. So, very cool, relevant examples. All right. So, let’s fast forward all the way to the end of your career. Let’s say 40 years from now, when you retire, what do you hope to look back on?

Lauren Zhu: 00:58:38

Do you know the Myers-Briggs personality test?

Jon Krohn: 00:58:42

Yeah.

Lauren Zhu: 00:58:43

It’s one of my favorite things. I think it’s crazy how four letters… Basically, it’s a personality test with four different letters, and each letter has, I guess, two variations, right? So, there’s I and E. Just to quickly explain it, I is introverted, E is extroverted. Then the second slot is N and S: N is intuition, S is sensing. And then you have F and T: feeling and thinking. Then for the last slot you have J and P: judging and perceiving. And the fact that you can take 16 combinations of these letters to describe a person is really powerful. It’s definitely a little bit of a generalization, but it’s really, really cool.

Lauren Zhu: 00:59:31

So, maybe you can guess this, but mine is ENTP. So, I am extroverted, intuitive, thinking, and perceiving. If you look up those letters, you’ll find a nickname for it, which is the visionary. And I can definitely see that in myself. I might be idealistic sometimes in the way that I think about things and just how I operate, but looking back at the end of my career, I would love to see that I turned those ideas and dreams into reality and acted on all the cool things that I’ve conjured up in my head. So, hopefully I can actually interior design my house the way that I’ve planned it in my head so elaborately. And when it comes to being an engineer, I hope to make the impact that I dream about every day.
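The “16 combinations” Lauren mentions come from picking one letter from each of four pairs, so there are 2 × 2 × 2 × 2 = 16 types. A short enumeration with `itertools.product` makes the count concrete, using the pairs in the order she lists them (the `DICHOTOMIES` and `TYPES` names are just illustrative):

```python
from itertools import product

# The four letter pairs, in the order Lauren lists them.
DICHOTOMIES = [("I", "E"), ("N", "S"), ("F", "T"), ("J", "P")]

# One letter from each pair: 2 * 2 * 2 * 2 = 16 possible types.
TYPES = ["".join(letters) for letters in product(*DICHOTOMIES)]

print(len(TYPES))
print("ENTP" in TYPES)
```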

Jon Krohn: 01:00:30

Cool. Yeah, that was such an unexpected but beautiful answer. Yeah, I was like, how is this going to… Oh, yeah.

Lauren Zhu: 01:00:41

There it goes.

Jon Krohn: 01:00:44

Nice. All right. So, given your rich and interesting experience, do you have a book recommendation for us that might expand our minds?

Lauren Zhu: 01:00:56

Yeah. One book that’s one of my favorite books of all time is called Thinking, Fast and Slow.

Jon Krohn: 01:01:03

Oh, yeah.

Lauren Zhu: 01:01:05

It’s a classic. I’m sure a lot of you have read it already, but if you haven’t, please check it out. It’s basically about how people think and how there are two different ways of thinking. The first one is this very fast, quick, intuitive kind of thinking where you don’t even have to think. And then the other one is what we tie more to academics and learning and storing memories in our brain, the very brain-intensive way of thinking. And it’s about how we use both in our daily lives, why they’re both important, and how they interact. It’s really, really fascinating.

Jon Krohn: 01:01:45

Yeah, I absolutely love that book. I hope to reread it soon. I’ve only read it once, and it is densely packed with information from Daniel Kahneman, the Nobel Prize-winning economist, on cognitive biases, with a lot of surprising, evidence-backed information on how you actually think relative to how you might feel you think. And while reading the book once does not rid you of all of the biases that you’re susceptible to, a little bit of awareness can at least help you mitigate some of the most pernicious ones. And yeah, I’d love to read it again to brush up and eliminate a few more.

Lauren Zhu: 01:02:32

Yeah, I think I might have to give it another read as well.

Jon Krohn: 01:02:35

Yeah. So, great tip, Lauren. I highly recommend that as well. So, how can people follow you or get in touch with you to hear what you’re up to on a regular basis?

Lauren Zhu: 01:02:47

Yeah, I am most active on Instagram. My handle is-

Jon Krohn: 01:02:51

All right.

Lauren Zhu: 01:02:52

Yeah, @heylauren, like the normal spelling, and then Z, my last initial. That’s H-E-Y-L-A-U-R-E-N-Z. You can also add me on LinkedIn. That’s probably it, I would say.

Jon Krohn: 01:03:08

Nice. Yeah, we’ll include those in the show notes. Lauren, I have loved filming this episode with you today. I said at the beginning of the episode that I would learn so much, and it happened. Yeah, I feel like I had a great, energizing life experience shooting this with you, and no doubt lots of audience members have had the same experience listening to you. And so, I would love to have you back on the show again sometime.

Lauren Zhu: 01:03:37

Thank you so much for having me. This was a blast. Absolutely fun.

Jon Krohn: 01:03:46

Lauren is so calm, cool, happy, and relaxed. I’m sure it was obvious that I had such a good time filming with her today. I hope you enjoyed our conversation too. I am deeply impressed by how much Lauren has accomplished in her career already, and I love her ability to explain deep technical concepts in such a straightforward, relatable way. In today’s episode, Lauren filled us in on what it’s like to study and TA some of the world’s most renowned machine learning courses at Stanford. You’ll be pleased to know that after filming with Lauren, I found a YouTube playlist with all of the winter 2021 lectures for CS224N, the Natural Language Processing with Deep Learning course. So, those epic lectures are indeed available for free after all. See the show notes for a link.

Jon Krohn: 01:04:31

Beyond Stanford, Lauren detailed the extraordinary AI research on zero shot multilingual neural machine translation that she carried out in Scotland. She talked about how natural language understanding enables exceptional search and rank capabilities at Glean. She talked about how the Google Colab and Apache Beam tools she uses day to day as a machine learning engineer bring NLP models to reality, and she let us know how paradoxically by resting and doing less work each week, you can ultimately end up being more productive and successful.

Jon Krohn: 01:05:04

As always, you can get all the show notes, including the transcript for this episode, the video recording, any materials mentioned on the show, the URLs for Lauren’s Instagram profile and website, and a YouTube video of her playing a ukulele on a mountainside with a dog, as well as my own social media profiles, at www.superdatascience.com/549. That’s www.superdatascience.com/549. If you enjoyed this episode, I’d greatly appreciate it if you left a review on your favorite podcasting app or on the SuperDataScience YouTube channel. I also encourage you to let me know your thoughts on this episode directly by adding me on LinkedIn or Twitter and then tagging me in a post about it. Your feedback is invaluable for helping us shape future episodes of the show.

Jon Krohn: 01:05:47

All right, thank you to Ivana, Mario, Jaime, JP, and Kirill on the SuperDataScience team for managing and producing another exceptional episode for us today. Keep on rocking it out there, folks, and catch you on another round of SuperDataScience very soon.